JupyterLab Installation and Configuration

You can use JupyterLab to access HeavyDB.

Installing Jupyter with HeavyDB

Install the NVIDIA drivers and nvidia-container-runtime for your operating system, using the instructions at https://github.com/NVIDIA/nvidia-container-runtime.

For Apt-based installations, such as Ubuntu, use the Docker preparation instructions.

Change the default Docker runtime to nvidia and restart Docker.

a. Edit /etc/docker/daemon.json and add "default-runtime": "nvidia". The resulting file should look similar to the following:

{
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  }
}

b. Restart the Docker service:

sudo systemctl restart docker

c. Validate NVIDIA Docker:

docker run --rm nvidia/cudagl:11.0-runtime-ubuntu18.04 nvidia-smi

Create a HEAVY.AI storage directory with a name of your choosing and change directories to it.

sudo mkdir /var/lib/heavyai
cd /var/lib/heavyai

Create the directory /var/lib/heavyai/jupyter/.

sudo mkdir /var/lib/heavyai/jupyter

Create the file /var/lib/heavyai/heavyai.conf, and configure the jupyter-url setting under the [web] section to point to the Jupyter service:

[web]
jupyter-url = "http://jupyterhub:8000"
servers-json = "/heavyai-storage/servers.json"

Create the file /var/lib/heavyai/data/heavyai.license. Copy your license key from the registration email message. If you have not received your license key, contact your Sales Representative or register for your 30-day trial here.

Create the following /var/lib/heavyai/servers.json entry to enable Jupyter features in Immerse.

[
  {
    "enableJupyter": true
  }
]

Create /var/lib/heavyai/docker-compose.yml.

version: '3.7'

services:

  heavyaiserver:
    container_name: heavyaiserver
    image: heavyai/heavyai-ee-cuda:v6.0.0
    restart: always
    ipc: shareable
    volumes:
      - /var/lib/heavyai:/heavyai-storage
      - /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox:/jhub_heavyai_dropbox
    networks:
      - heavyai-frontend
      - heavyai-backend
    ports:
      - "6273:6273"
      - "6274:6274"
      - "6278:6278"
    # If using binary encryption, uncomment the below line to override the default
    # command that uses startheavyai, noting that you must have an existing HEAVY.AI
    # data directory and run initdb before making this change.
    # command: /bin/bash -c "/heavyai/bin/heavy_web_server --config /heavyai-storage/heavyai.conf & /heavyai/bin/heavydb --config /heavyai-storage/heavyai.conf"

  # The purpose of this is to make sure the jlabimage is pulled because jupyterhub will not pull it automatically when launching
  jupyterlab-tmp:
    image: &jlabimage omnisci/omnisci-jupyterlab-cpu:v0.5
    command: echo
    networks:
      - heavyai-backend

  jupyterhub:
    container_name: jupyterhub
    image: omnisci/omnisci-jupyterhub:v0.4
    restart: always
    networks:
      - heavyai-backend
    depends_on:
      - heavyaiserver
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      # Map this volume if binary encryption mode is configured and certificates are being validated
      # - /var/lib/heavyai/cacerts.crt:/heavyai-storage/cacerts.crt
    environment:
      ### Required settings ###
      DOCKER_JUPYTER_LAB_IMAGE: *jlabimage
      HEAVYAI_HOST: heavyaiserver
      HEAVYAI_JUPYTER_ROLE: heavyai_jupyter

      ### Optional settings ###
      # DOCKER_NOTEBOOK_DIR: /home/jovyan # The directory inside the user's Jupyter Lab container to mount the user volume to.
      # HUB_IP: jupyterhub # The hostname or IP of the Jupyter Hub server
      # JHUB_BASE_URL: /jupyter/ # The base URL prepended to all Jupyter Hub and Lab requests
      # HEAVYAI_ALLOW_SUPERUSER_ROLE: "false" # Enable / disable admin access to Jupyter Hub
      # JLAB_CONTAINER_AUTOREMOVE: "true" # Enable / disable automatic removal of stopped Jupyter Lab containers
      # JLAB_DOCKER_NETWORK_NAME: heavyai-backend # The docker network name for Jupyter Lab containers
      # JLAB_IDLE_TIMEOUT: 3600 # Shut down Jupyter Lab containers after this many seconds of idle time
      # JLAB_NAME_PREFIX: jupyterlab # Container name prefix for Lab containers
      # JLAB_HEAVYAI_IMPORT_VOLUME_PATH: /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox # Local Docker host path for where to mount the shared directory available to the HeavyDB server for file ingest
      # JLAB_NOTEBOOK_TERMINALS_ENBLED: "false" # Enable terminals in notebooks
      # JLAB_USER_VOLUME_PATH: /var/lib/heavyai/jupyter/ # Local Docker host path to be used for user Jupyter Lab home directory volume mapping
      # JUPYTER_DEBUG: "true" # Turn on / off debugging for Jupyter Hub and Lab
      # HEAVYAI_BINARY_TLS_CACERTS_LOCAL_PATH: /var/lib/heavyai/cacerts.crt # Specifying this or mapping a volume in the Hub container to /heavyai-storage/cacerts.crt will automatically enable binary TLS mode
      # HEAVYAI_BINARY_TLS_VALIDATE: "false" # Whether or not to validate certificates in binary TLS mode. Specifying either "true" or "false" will enable binary TLS mode
      # HEAVYAI_PORT: 6278 # Port that Jupyter Hub should use to connect to HEAVY.AI. Ensure this matches the protocol
      # HEAVYAI_PROTOCOL: http # Protocol that Jupyter Hub should use to connect to HEAVY.AI. Ensure this is "binary" if using binary encryption
      # SPAWNER_CPU_LIMIT: 1 # Number of CPU cores available for each Jupyter Lab container, see https://jupyterhub.readthedocs.io/en/stable/api/spawner.html#jupyterhub.spawner.Spawner.cpu_limit
      # SPAWNER_RAM_LIMIT: 10G # Amount of CPU RAM available for each Jupyter Lab container, see https://jupyterhub.readthedocs.io/en/stable/api/spawner.html#jupyterhub.spawner.LocalProcessSpawner.mem_limit
      # SPAWNER_ENV_HEAVYAI_HOST: heavyaiserver # Hostname / IP address of the HEAVY.AI server for Lab containers to connect to by default
      # SPAWNER_ENV_HEAVYAI_PORT: 6274 # Port of the HEAVY.AI server for Lab containers to connect to by default
      # SPAWNER_ENV_HEAVYAI_PROTOCOL: binary # Protocol of the HEAVY.AI server for Lab containers to connect to by default
      # HEAVYAI_DB_URL: "heavyai://heavyaiserver:6274/heavyai" # Alternative, direct connection (not Immerse session) to heavyaiserver. Username will be inferred, but password will be required in notebook.

      # More volumes for lab containers:
      # JLAB_VOLUME_1: /data1:/data1:rw
      # JLAB_VOLUME_2: /var/lib/heavyai:/heavyai-storage:ro

networks:
  heavyai-frontend:
    driver: bridge
    name: heavyai-frontend
  heavyai-backend:
    driver: bridge
    name: heavyai-backend

11. Run docker-compose pull to download the images from Docker Hub. Optional when you specify a version in docker-compose.yml.

12. Make sure you are in the directory that you created in step 5, and run compose in detached mode:

docker-compose up -dcompose restarts by default whenever stopped.

13. Log in as the super user (admin/HyperInteractive).

14. Create required users in HeavyDB.

15. Create the heavyai_jupyter role in HeavyDB:

CREATE ROLE heavyai_jupyter16. Grant the heavyai_jupyter role to users who require Jupyter access:

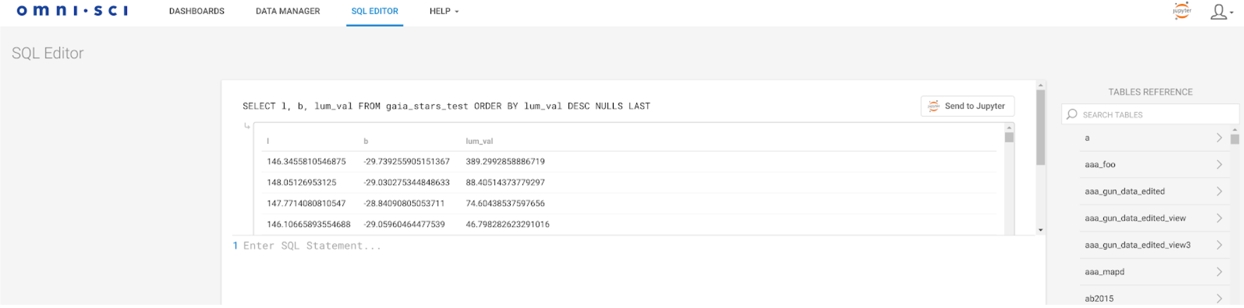

GRANT heavyai_jupyter TO usernameYou should now see Jupyter icons in the upper right of Immerse and when running queries in SQL Editor.
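When many users need Jupyter access, the role statements from the last two steps can be generated rather than typed one at a time. The following is a minimal sketch, not part of the HEAVY.AI tooling: the function name, the identifier check, and the example usernames are assumptions made for illustration. It only builds the SQL text, which you would then run in SQL Editor or your usual client.

```python
import re

# Role name taken from HEAVYAI_JUPYTER_ROLE in docker-compose.yml.
JUPYTER_ROLE = "heavyai_jupyter"

# Assumption for this sketch: accept plain identifiers only, so a stray
# quote or space in a username cannot change the generated statement.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def jupyter_access_statements(usernames):
    """Return the CREATE ROLE statement followed by one GRANT per user."""
    statements = [f"CREATE ROLE {JUPYTER_ROLE};"]
    for name in usernames:
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unexpected characters in username: {name!r}")
        statements.append(f"GRANT {JUPYTER_ROLE} TO {name};")
    return statements


if __name__ == "__main__":
    # "alice" and "bob" are placeholder usernames.
    for statement in jupyter_access_statements(["alice", "bob"]):
        print(statement)
```

If the role already exists, drop the first statement from the list before running the rest.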

Adding Jupyter to an Existing HeavyDB Instance

To use Jupyter with an existing, non-Docker install of HEAVY.AI, change HEAVY.AI to run on Docker instead of the host. The steps are the same as the install instructions, with the following exceptions:

Change the volume mappings to point to your existing installation path:

heavyaiserver:
  container_name: heavyaiserver
  image: heavyai/heavyai-ee-cuda:v6.0.0
  restart: always
  ipc: shareable
  volumes:
    - /var/lib/heavyai:/heavyai-storage
    - /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox:/jhub_heavyai_dropbox

Enable the following environment variables and change the relevant paths to your existing installation:

### Optional settings ###
# DOCKER_NOTEBOOK_DIR: /home/jovyan # The directory inside the user's Jupyter Lab container to mount the user volume to.
# HUB_IP: jupyterhub # The hostname or IP of the Jupyter Hub server
# JHUB_BASE_URL: /jupyter/ # The base URL prepended to all Jupyter Hub and Lab requests
# JHUB_ENABLE_ADMIN_ACCESS: "false" # Enable / disable admin access to Jupyter Hub
# JLAB_DOCKER_NETWORK_NAME: heavyai-backend # The docker network name for Jupyter Lab containers
# JLAB_IDLE_TIMEOUT: 3600 # Shut down Jupyter Lab containers after this many seconds of idle time
# JLAB_NAME_PREFIX: jupyterlab # Container name prefix for Lab containers
JLAB_HEAVYAI_IMPORT_VOLUME_PATH: /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox # Local Docker host path for where to mount the shared directory available to the HeavyDB server for file ingest
# JLAB_NOTEBOOK_TERMINALS_ENBLED: "false" # Enable terminals in notebooks
JLAB_USER_VOLUME_PATH: /var/lib/heavyai/jupyter/ # Local Docker host path to be used for user Jupyter Lab home directory volume mapping
# JUPYTER_DEBUG: "true" # Turn on / off debugging for Jupyter Hub and Lab
# HEAVYDB_CONTAINER_NAME: heavyaiserver # HeavyDB container name for IPC sharing with Lab containers
# SPAWNER_CPU_LIMIT: 1 # Number of CPU cores available for each Jupyter Lab container, see https://jupyterhub.readthedocs.io/en/stable/api/spawner.html#jupyterhub.spawner.Spawner.cpu_limit
# SPAWNER_RAM_LIMIT: 10G # Amount of CPU RAM available for each Jupyter Lab container, see https://jupyterhub.readthedocs.io/en/stable/api/spawner.html#jupyterhub.spawner.LocalProcessSpawner.mem_limit
# SPAWNER_ENV_HEAVYAI_HOST: heavyaiserver # Hostname / IP address of the HEAVY.AI server for Lab containers to connect to by default
# SPAWNER_ENV_HEAVYAI_PORT: 6274 # Port of the HEAVY.AI server for Lab containers to connect to by default
# SPAWNER_ENV_HEAVYAI_PROTOCOL: binary # Protocol of the HEAVY.AI server for Lab containers to connect to by default

If you have an existing heavyai.conf file:

Add the required sections instead of creating a new file:

[web]
jupyter-url = "http://jupyterhub:8000"
servers-json = "/heavyai-storage/servers.json"

Remove the data, port, http-port, and frontend properties, which should not be changed with a Docker installation.

Ensure that all paths, such as cert and key, are accessible by Docker.
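The heavyai.conf changes above can be sketched as a small text transformation. This is an illustrative helper only, assuming the file uses simple key = value lines and [section] headers; the function name adapt_conf is hypothetical. Note that it removes port in every section, so review the result before using it on a real file.

```python
# Properties that should not be set for a Docker installation.
REMOVED_KEYS = {"data", "port", "http-port", "frontend"}

# Settings the [web] section needs for Jupyter.
WEB_SETTINGS = {
    "jupyter-url": '"http://jupyterhub:8000"',
    "servers-json": '"/heavyai-storage/servers.json"',
}


def adapt_conf(text):
    """Drop host-only properties and make sure [web] has the Jupyter settings."""
    kept = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip() if "=" in line else None
        # Skip removed properties, and stale copies of the settings re-added below.
        if key in REMOVED_KEYS or key in WEB_SETTINGS:
            continue
        kept.append(line)

    web_lines = [f"{name} = {value}" for name, value in WEB_SETTINGS.items()]
    headers = [i for i, line in enumerate(kept) if line.strip() == "[web]"]
    if headers:
        # Insert directly under the existing [web] header.
        kept[headers[0] + 1:headers[0] + 1] = web_lines
    else:
        # No [web] section yet: append one at the end of the file.
        kept += ["", "[web]", *web_lines]
    return "\n".join(kept) + "\n"
```

Commented-out lines are left untouched, because a line such as # port = 6274 does not parse to one of the removed keys.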

If you have an existing servers.json file, move it to your HEAVY.AI home directory (/var/lib/heavyai by default) and add the following key/value pair:

"enableJupyter": true

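Adding the key to an existing servers.json by hand is easy to get wrong when the file already holds other settings. The sketch below shows one way to do it, assuming the file contains a JSON array of server entries as in the install example; the function name and the sample "database" key are illustrative assumptions, not a HEAVY.AI utility.

```python
import json


def enable_jupyter(servers_json_text):
    """Return servers.json text with "enableJupyter": true on every entry."""
    servers = json.loads(servers_json_text)
    if not isinstance(servers, list):
        raise ValueError("expected servers.json to contain a JSON array")
    if not servers:
        # An empty array gets a single entry, matching the install example.
        servers.append({})
    for entry in servers:
        entry["enableJupyter"] = True
    return json.dumps(servers, indent=2)


if __name__ == "__main__":
    # Sample content is hypothetical; existing keys are preserved as-is.
    print(enable_jupyter('[{"database": "heavyai"}]'))
```

Write the returned text back to servers.json in your HEAVY.AI home directory after checking it.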
Creating the jhub_heavyai_dropbox Directory

Run the following commands to create the jhub_heavyai_dropbox directory and make it writable by your users. If your installation uses a different path, change the paths to match:

sudo mkdir -p /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox

sudo chown 1000 /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox

sudo chmod 750 /var/lib/heavyai/data/heavyai_import/jhub_heavyai_dropbox

This allows Jupyter users to write files into /home/jovyan/jhub_heavyai_dropbox/. You can also use that directory path in COPY FROM SQL commands.
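To see what these commands leave behind, the same three steps can be rehearsed in Python against a temporary directory. This is a sketch for illustration, not a replacement for the commands above; the function name create_dropbox is an assumption, and the ownership change is skipped unless the script runs as root.

```python
import os
import stat
import tempfile


def create_dropbox(path, owner_uid=1000, mode=0o750):
    """Create the directory, then apply ownership and permissions."""
    os.makedirs(path, exist_ok=True)
    # chown needs root; skip it when running unprivileged (for example, in a dry run).
    if hasattr(os, "geteuid") and os.geteuid() == 0:
        os.chown(path, owner_uid, -1)  # -1 leaves the group unchanged
    os.chmod(path, mode)
    # Render the resulting mode the way "ls -l" would.
    return stat.filemode(os.stat(path).st_mode)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        target = os.path.join(root, "data", "heavyai_import", "jhub_heavyai_dropbox")
        print(create_dropbox(target))
```

Mode 750 gives the owner (UID 1000 here) full access, gives the group read and traverse access, and gives other users no access.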

Upgrading docker-compose Services

To upgrade Jupyter images using the docker-compose.yml file, edit docker-compose.yml as follows:

services:
  heavyaiserver:
    ...
    image: heavyai/heavyai-ee-cuda:v6.0.0
    ...
  jupyterhub:
    container_name: jupyterhub
    image: omnisci/omnisci-jupyterhub:v0.4
    ...
    environment:
      ...
      DOCKER_JUPYTER_LAB_IMAGE: &jlabimage omnisci/omnisci-jupyterlab-cpu:v0.5

Then, use the following commands to download the images and restart the services with the new versions:

docker-compose pull

docker-compose up -d

You might also need to stop lab containers so that they start again from the new image. First, list the running lab containers:

docker ps | grep jupyterlab

For each user, run the following command:

docker rm -f jupyterlab-<username>

Using Jupyter

Open JupyterLab by clicking the Jupyter icon in the upper right corner of Immerse.

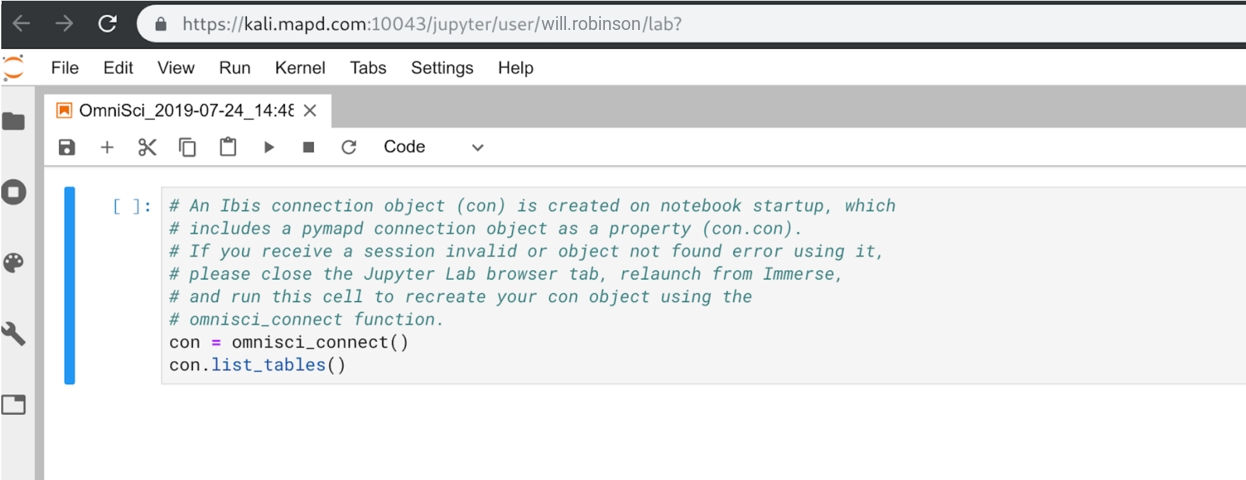

JupyterLab opens in a new tab. You are signed in automatically using HEAVY.AI authentication, with a notebook ready to start a HEAVY.AI connection. The notebook is saved for you at the root of your Jupyter file system.

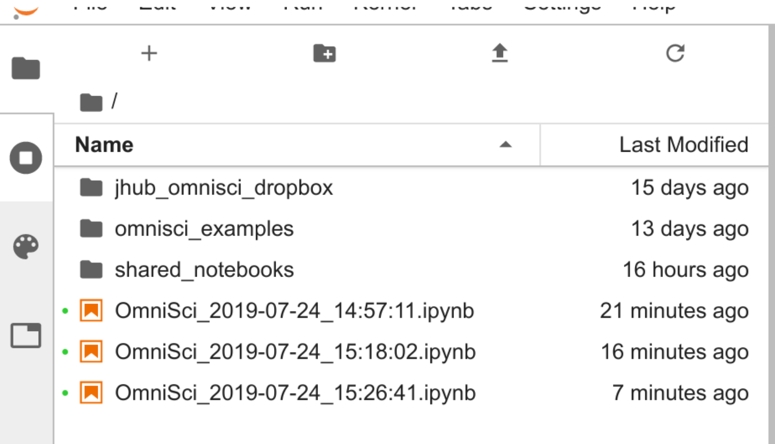

You can verify the location of your file by clicking the folder icon in the top left.

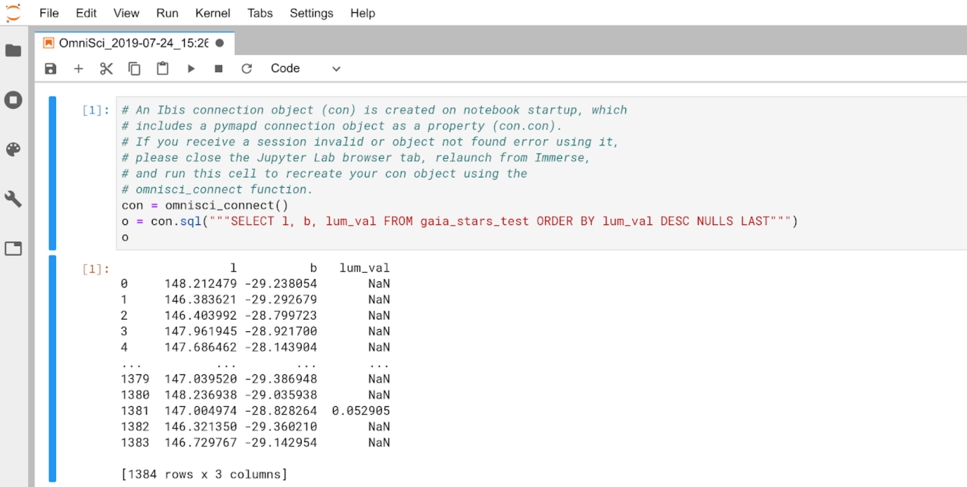

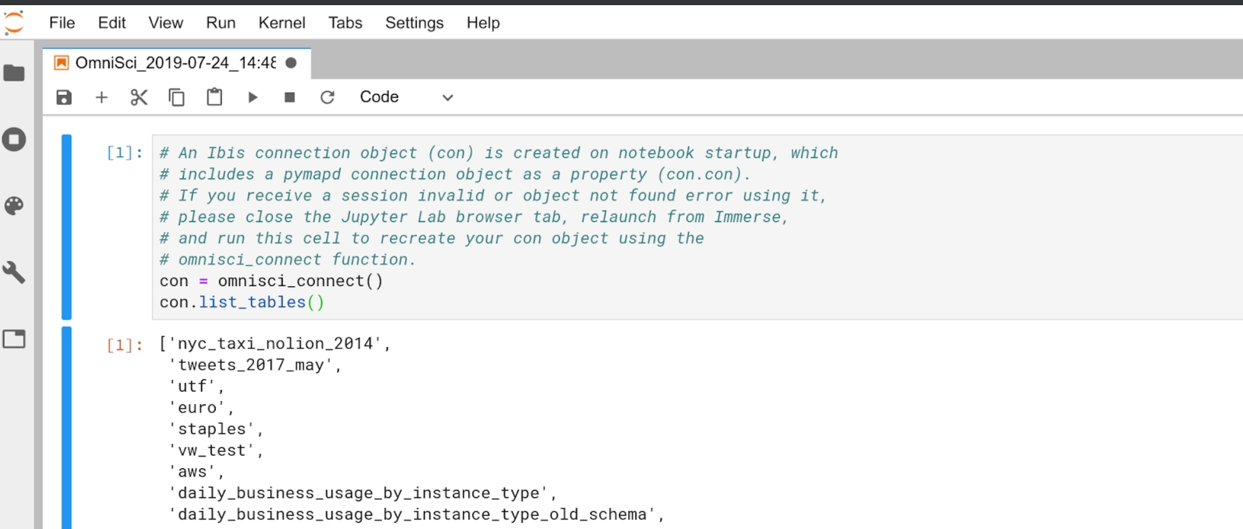

The contents of the cell are prefilled with explanatory comments and the heavyai_connect() method ready to set up your HEAVY.AI connection in Jupyter. Click the Play button to run the connection statement and list the tables in your HeavyDB instance.

You can continue to use con to run more Ibis expressions in other cells.

The connection reuses the session already in use by Heavy Immerse by passing Jupyter the raw session ID. If you connect this way without credentials, the connection has a time-to-live (TTL). After the session timeout period passes with no activity (60 minutes by default), the session is invalidated. You have to reenter Jupyter from Immerse in the same way to reestablish the connection, or use the heavyai connect method to enter your credentials manually.
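The timeout is measured from the last activity, not from the moment you opened Jupyter. The following sketch models that rule only; it is not HeavyDB code, and the function name and timestamps are made up for illustration.

```python
from datetime import datetime, timedelta

# Default idle timeout described above.
SESSION_TTL = timedelta(minutes=60)


def session_expired(last_activity, now, ttl=SESSION_TTL):
    """Return True once more than ttl has passed since the last activity."""
    return now - last_activity > ttl


if __name__ == "__main__":
    opened = datetime(2024, 1, 1, 9, 0)
    last_query = opened + timedelta(minutes=50)

    # 70 minutes after opening, but only 20 minutes after the last query.
    print(session_expired(last_query, opened + timedelta(minutes=70)))
    # 61 minutes after the last query with nothing in between.
    print(session_expired(last_query, last_query + timedelta(minutes=61)))
```

In this model the first check reports that the session is still valid and the second reports that it has expired, at which point you would return to Immerse and open Jupyter again.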

You can also launch Jupyter from the Heavy Immerse SQL Editor. After you run a query in the SQL Editor, you see a button that allows you to send your query to Jupyter.

The query appears in a separate notebook, ready to run. You must run the cell yourself to send the query and see the results.