Joins (Beta)

In Heavy Immerse, you can join columns from different tables so that you can access data across join and geojoin relations. Heavy Immerse supports two join types (left and inner). Using joins in queries provides increased performance and flexibility over cohorts since measures and dimensions can be built from columns of joined tables.

In the 7.0.0 beta version of Joins, adherence to column types is essential when performing join operations. Integer columns designated as INT are incompatible with those classified as BIGINT, and cannot be merged together.

General Process

The following scenario describes at a high level a typical Heavy Immerse join process:

You discover that data you want to chart is not available in the primary or left-hand table.

However, the data that you want is available in a different table and the two tables share a common join column.

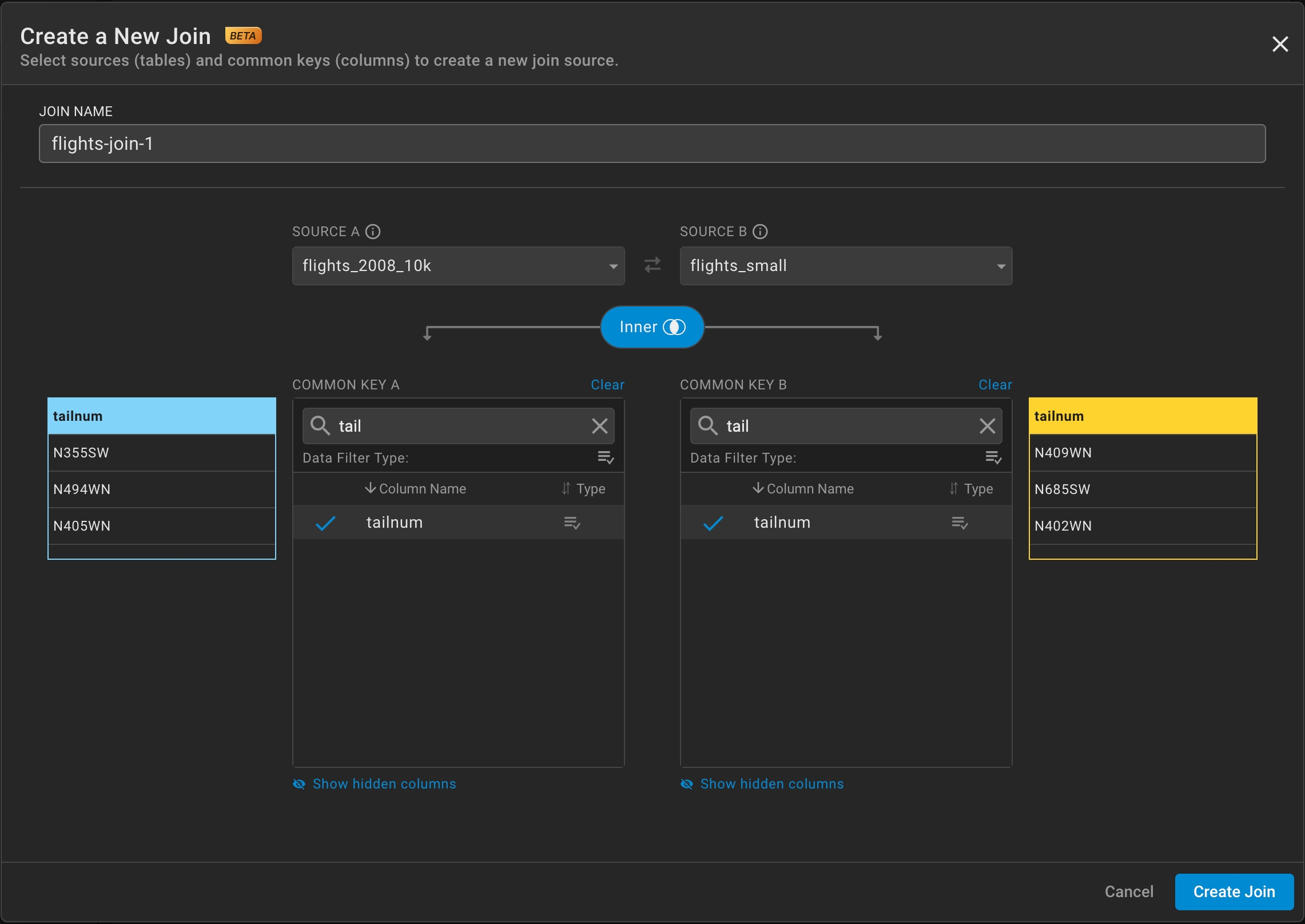

Join columns must match in both type and in contents. Immerse currently provides join recommendations based on data types and not column data contents. However, Immerse does provide column previews for you to determine suitability by inspection.

Once the join column is selected, you determine join type. This defaults to an "inner" join, which includes non-null matching records. By switching the join mode to "left" you can restrict the results to only look-ups of values which appear in the left hand table.

You need to name the join before proceeding. This name will then become the default data source for the current chart, but is also available for use in other charts within your dashboard. Joins are persisted within dashboards and therefore can be shared with others.

Joins vs. Crosslinks

Joins and crosslinks have some similarities and differences, and their use depends on your data and how you want to chart it.

A crosslink uses common columns to coordinate cross filtering behavior between charts.

A join performs a SQL command which creates a new named datasource containing columns from both tables. Dimensions and measures can be based on the combination of columns.

For example, consider the following use cases:

Join: You have flight data that contains a plane model number and other flight information, such as model number, arrival city, destination city, arrival time, and departure time. You want to combine this with a plane reference dataset that contains information about the airplane itself (model number, number of engines, year built, and so on). You can create a join from these two datasets on the model_number column, and use fields from both datasets to create your charts. For example, you could color flights based on the airplane age.

Crosslink: With the same two starting tables, you establish a crosslink on plane model number. You make a map chart of flights from the flights table and a histogram combination chart of airplane ages from the airplane characteristics table. When you select old planes on the histogram, only these planes are mapped. However, if you attempt to build add a color measure on the map, you will not have access to the plane characteristics columns (because they are not joined).

Creating a Join

Open a dashboard and select the chart type that you want to create; in this case, a Table.

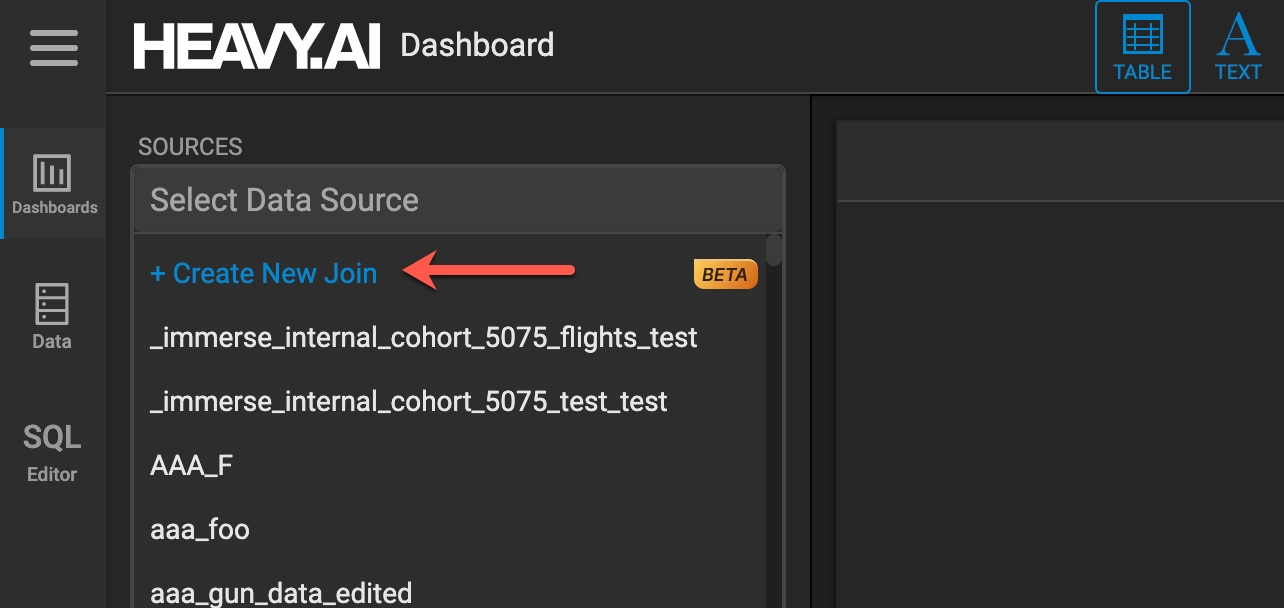

Click Create New Join.

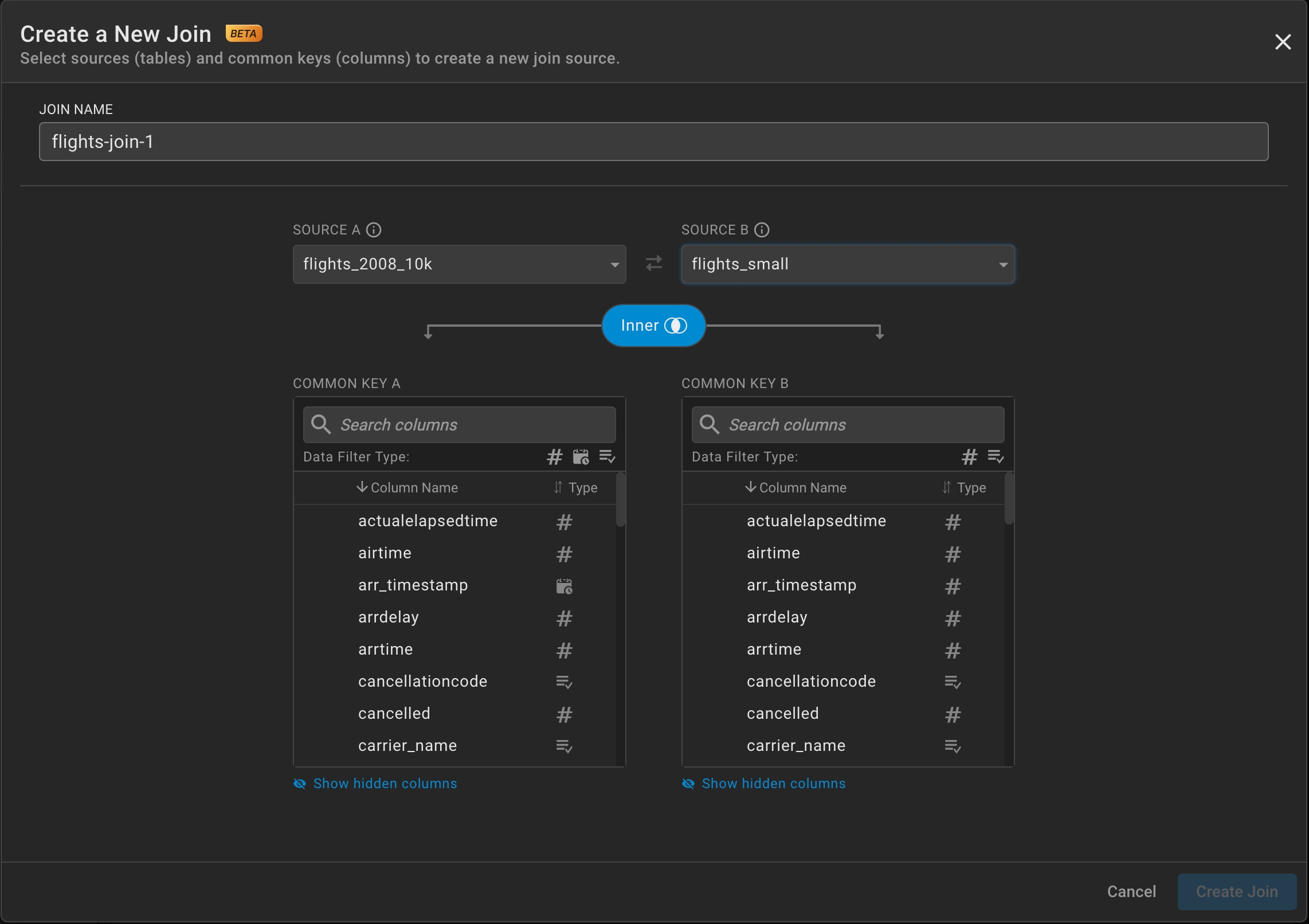

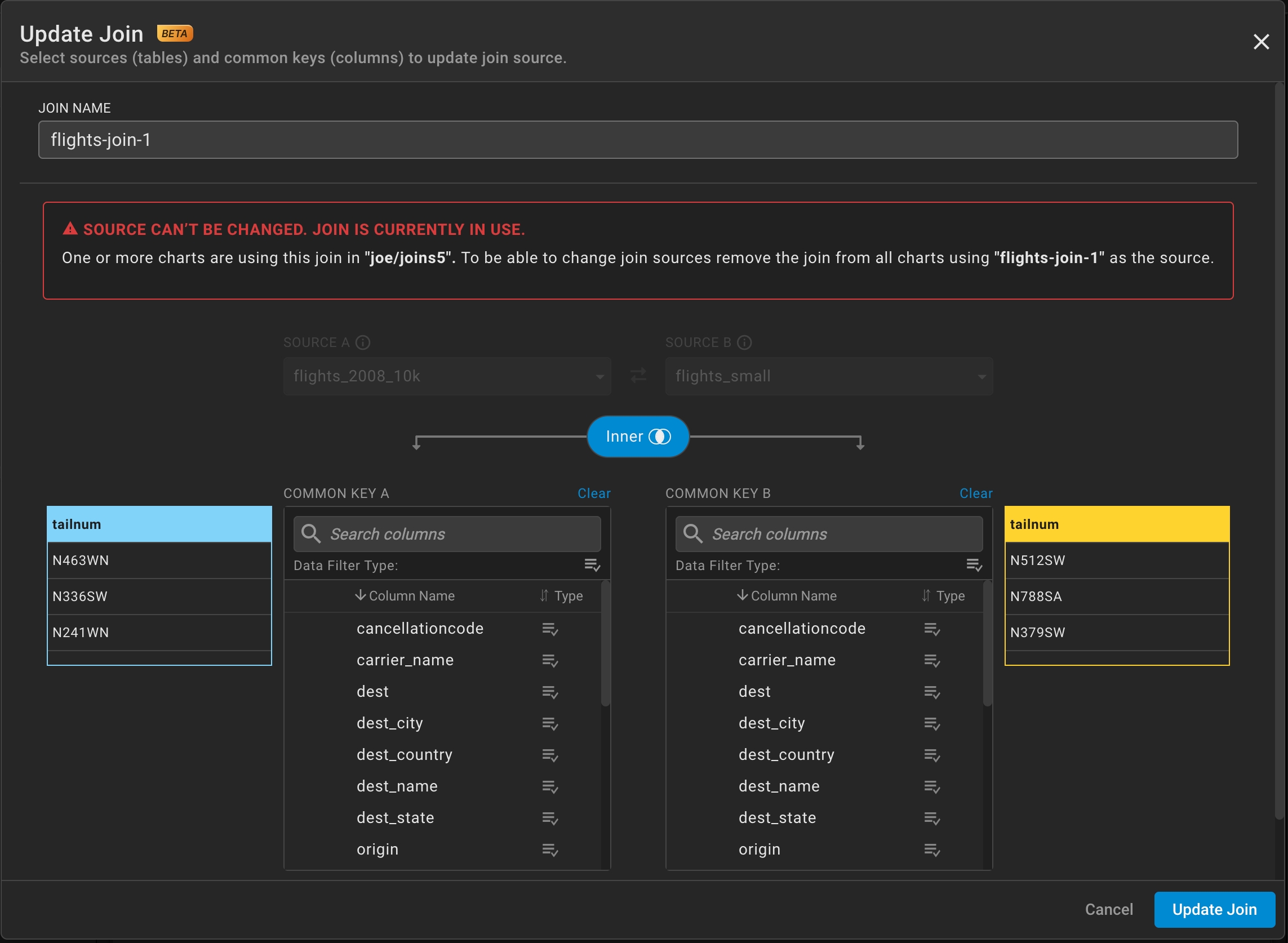

In Create a New Join, name the join (flights-join-1), select the data sources: Source A (flights_2008_10k) and Source B (flights_small), and select the join type (in this example, Inner).

Select Common Key A and Common Key B (in this case, tailnum for both) and click Create Join.

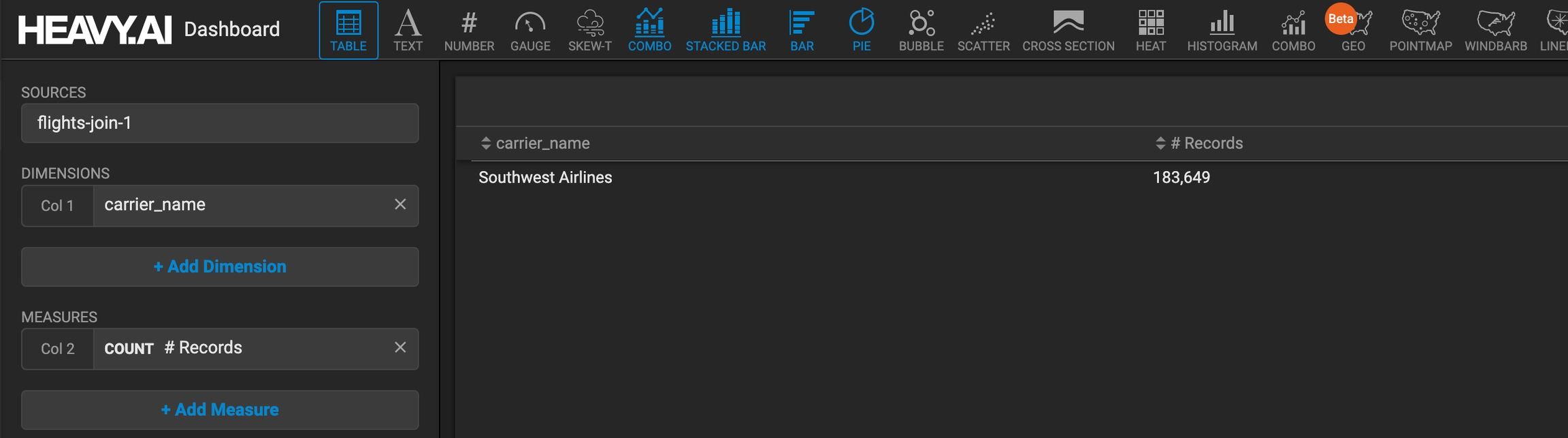

Add the dimension (carrier_name here) for column 1 and the measure (Count #Records here) for column 2 to update the Table.

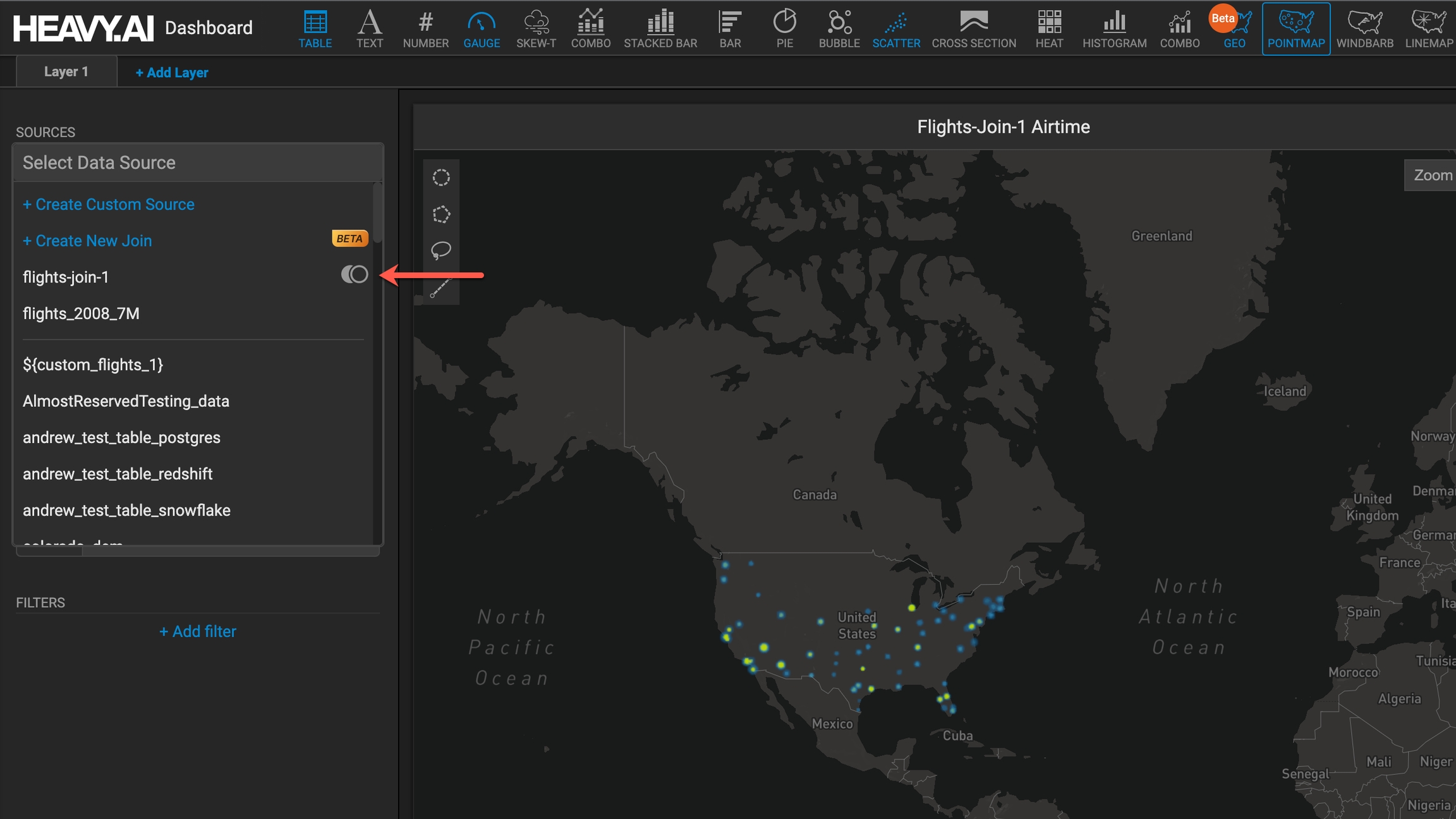

You can use the join you just created as the source for other charts; the join icon denotes a join source. Here, the Pointmap is created using the flights-join-1 source, with Lon, Lat, and Size measures defined as shown:

Edit a Join

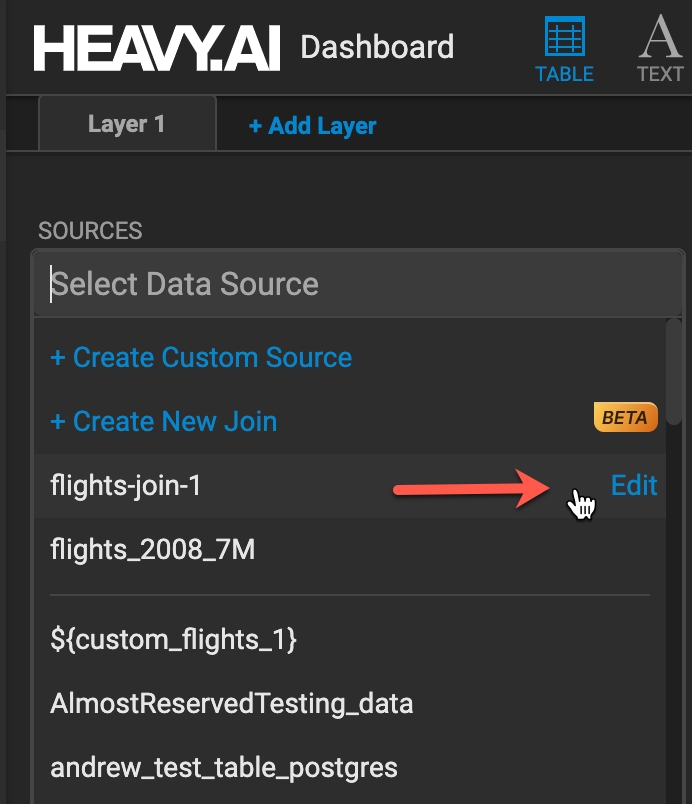

You can edit the join after you create it by hovering on the join and then clicking Edit.

Update a Join

To update the join, you must first remove it from all charts using it as the source, make your changes, and then click Update Join.

Last updated