Getting Started with Vega

Source code is located at the end of the tutorial.

This tutorial uses the same visualization as Vega at a Glance but elaborates on the runtime environment and implementation steps. The Vega usage pattern described here applies to all Vega implementations. Subsequent tutorials differ only in describing more advanced Vega features.

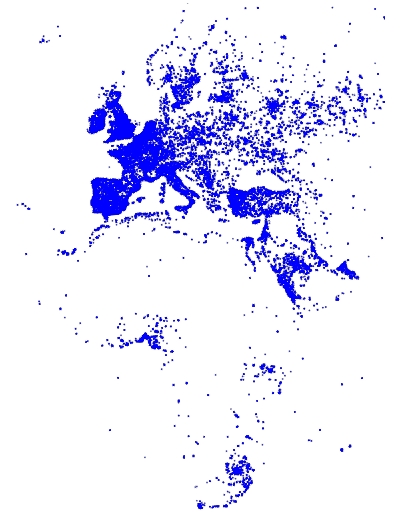

This visualization maps a continuous, quantitative input domain to a continuous output range. As in Vega at a Glance, it shows tweets in the EMEA region, drawn from a tweets data table.

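Backend rendering using Vega involves three steps: creating the Vega specification, connecting to the backend, and making the render request and handling the response.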

You can create the Vega specification statically, as shown in this tutorial, or programmatically. See the Poly Map with Backend Rendering charting example for a programmatic implementation.
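As a rough illustration of the programmatic approach, the sketch below assembles the same kind of specification that Step 1 builds statically. It is not the code from the Poly Map example: buildPointSpec and its option names are hypothetical, chosen only for this illustration, and the SQL column names are taken from the query used later in this tutorial.

```javascript
// Hypothetical helper (not part of Vega or the MapD connector):
// builds a point-map specification from a few parameters.
function buildPointSpec({ width, height, table, xDomain, yDomain, color = "blue", size = 3 }) {
  return {
    width,
    height,
    data: [
      {
        name: "tweets",
        // Column names follow the query used in this tutorial.
        sql: `SELECT goog_x as x, goog_y as y, ${table}.rowid FROM ${table}`
      }
    ],
    scales: [
      { name: "x", type: "linear", domain: xDomain, range: "width" },
      { name: "y", type: "linear", domain: yDomain, range: "height" }
    ],
    marks: [
      {
        type: "points",
        from: { data: "tweets" },
        properties: {
          x: { scale: "x", field: "x" },
          y: { scale: "y", field: "y" },
          fillColor: color,
          size: { value: size }
        }
      }
    ]
  };
}

// Build a specification using the values from this tutorial.
const generatedSpec = buildPointSpec({
  width: 384,
  height: 564,
  table: "tweets_nov_feb",
  xDomain: [-3650484.1235206556, 7413325.514451755],
  yDomain: [-5778161.9183506705, 10471808.487466192]
});

console.log(JSON.stringify(generatedSpec, null, 2));
```

Generating the specification from a function like this makes it easier to vary the table, size, or domain per request, at the cost of one more layer between you and the JSON that the backend receives.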

Step 1 - Create the Vega Specification

A Vega JSON specification has the following general structure:

    const exampleVega = {
      width: <numeric>,
      height: <numeric>,
      data: [ ... ],
      scales: [ ... ],
      marks: [ ... ]
    };

Specify the Visualization Area

The width and height properties define the width and height of your visualization area, in pixels:

    const exampleVega = {
      width: 384,
      height: 564,
      data: [ ... ],
      scales: [ ... ],
      marks: [ ... ]
    };

Specify the Data Source

This example uses the following SQL statement to get the tweets data:

    data: [
      {
        "name": "tweets",
        "sql": "SELECT goog_x as x, goog_y as y, tweets_nov_feb.rowid FROM tweets_nov_feb"
      }
    ]

The input data are the latitude and longitude coordinates of tweets from the tweets_nov_feb data table. The query aliases the coordinates as x and y so that the marks property, which references this data source by its name, tweets, can refer to them as fields.

Specify the Graphical Properties of the Rendered Data Item

The marks property specifies the graphical attributes of how each data item is rendered:

    marks: [
      {
        type: "points",
        from: {
          data: "tweets"
        },
        properties: {
          x: {
            scale: "x",
            field: "x"
          },
          y: {
            scale: "y",
            field: "y"
          },
          "fillColor": "blue",
          size: {
            value: 3
          }
        }
      }
    ]

In this example, each data item from the tweets data table is rendered as a point. The points mark type includes position, fill color, and size attributes. The marks property specifies how to visually encode points according to these attributes. Points in this example are three pixels in diameter and colored blue.

Points are scaled to the visualization area using the scales property.
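Because marks refer to data sources and scales by name, a misspelled name is an easy mistake to make. The following is a minimal sketch of a check for that. findBrokenReferences is a hypothetical helper written for this illustration, not part of Vega or the MapD connector, and it inspects only the from.data and scale fields used in this tutorial.

```javascript
// Hypothetical helper: lists mark references that point at
// data sources or scales the specification does not define.
function findBrokenReferences(spec) {
  const dataNames = new Set((spec.data || []).map((d) => d.name));
  const scaleNames = new Set((spec.scales || []).map((s) => s.name));
  const problems = [];

  (spec.marks || []).forEach((mark, i) => {
    const source = mark.from && mark.from.data;
    if (source !== undefined && !dataNames.has(source)) {
      problems.push(`marks[${i}].from.data references unknown data source "${source}"`);
    }
    for (const [prop, def] of Object.entries(mark.properties || {})) {
      // Only object-valued properties can carry a scale reference.
      if (def && typeof def === "object" && def.scale !== undefined && !scaleNames.has(def.scale)) {
        problems.push(`marks[${i}].properties.${prop} references unknown scale "${def.scale}"`);
      }
    }
  });

  return problems;
}

// A trimmed-down version of the tutorial specification.
const sampleSpec = {
  data: [{ name: "tweets" }],
  scales: [{ name: "x" }, { name: "y" }],
  marks: [
    {
      type: "points",
      from: { data: "tweets" },
      properties: {
        x: { scale: "x", field: "x" },
        y: { scale: "y", field: "y" },
        fillColor: "blue",
        size: { value: 3 }
      }
    }
  ]
};

console.log(findBrokenReferences(sampleSpec)); // []
```

Running a check like this before sending the specification gives a readable message on the client instead of a render error from the server.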

Specify How Input Data are Scaled to the Visualization Area

The following scales specification maps marks to the visualization area.

    scales: [
      {
        name: "x",
        type: "linear",
        domain: [
          -3650484.1235206556,
          7413325.514451755
        ],
        range: "width"
      },
      {
        name: "y",
        type: "linear",
        domain: [
          -5778161.9183506705,
          10471808.487466192
        ],
        range: "height"
      }
    ]

Both x and y scales specify a linear mapping of the continuous, quantitative input domain to a continuous output range. In this example, input data values are transformed to predefined width and height range values.
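To make the linear mapping concrete, here is a minimal sketch of the arithmetic a linear scale performs. It is an illustration only, not the backend's implementation: linearScale is a hypothetical function, and the sketch assumes that the range keyword "width" corresponds to the pixel interval from 0 to the specification's width (384 here).

```javascript
// Hypothetical illustration of a linear scale: maps a value from
// [d0, d1] in the input domain to [r0, r1] in the output range.
function linearScale(domain, range) {
  const [d0, d1] = domain;
  const [r0, r1] = range;
  return (value) => r0 + ((value - d0) / (d1 - d0)) * (r1 - r0);
}

// Assumption: range "width" means the interval [0, width].
const xScale = linearScale([-3650484.1235206556, 7413325.514451755], [0, 384]);

console.log(xScale(-3650484.1235206556)); // 0
console.log(xScale(7413325.514451755));   // 384
console.log(xScale(0));                   // a pixel position between 0 and 384
```

Values at the ends of the domain land on the ends of the range, and everything in between is interpolated proportionally.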

Later tutorials show how to specify data transformation using discrete domain-to-range mapping.
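One detail of the numbers in this step is worth a worked example. With two independent linear scales, the map looks undistorted only if the pixel dimensions have the same aspect ratio as the domain extents. The tutorial does not say how 384 and 564 were chosen, so treat the following as an observation rather than the author's method. heightForWidth is a hypothetical helper.

```javascript
// Hypothetical helper: given a pixel width, returns the pixel height
// that preserves the aspect ratio of the x and y domain extents.
function heightForWidth(width, xDomain, yDomain) {
  const xExtent = xDomain[1] - xDomain[0];
  const yExtent = yDomain[1] - yDomain[0];
  return width * (yExtent / xExtent);
}

const fittedHeight = heightForWidth(
  384,
  [-3650484.1235206556, 7413325.514451755],
  [-5778161.9183506705, 10471808.487466192]
);

console.log(Math.round(fittedHeight)); // 564
```

If you change the domain to zoom or pan, recomputing one dimension this way keeps the rendered points from appearing stretched.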

Step 2 - Connect to the Backend

Use the browser-connector.js renderVega() API to communicate with the backend. The connector is layered on Apache Thrift for cross-language client communication with the server.

Follow these steps to instantiate the connector and to connect to the backend:

1. Include browser-connector.js, located at https://github.com/omnisci/mapd-connector/tree/master/dist, to load the MapD connector and Thrift interface APIs:

       <script src="<localJSdir>/browser-connector.js"></script>

2. Instantiate the MapdCon() connector and set the server name, protocol information, and your authentication credentials, as described in the MapD Connector API:

       var vegaOptions = {}
       var connector = new MapdCon()
         .protocol("http")
         .host("my.host.com")
         .port("6273")
         .dbName("omnisci")
         .user("omnisci")
         .password("HyperInteractive")

   | Property | Description |
   | --- | --- |
   | dbName | OmniSci database name. |
   | host | OmniSci web server name. |
   | password | OmniSci user password. |
   | port | OmniSci web server port. |
   | protocol | Communication protocol: http, https. |
   | user | OmniSci user name. |

3. Finally, call the MapD connector API connect() function to initiate a connect request, passing a callback function with an (error, success) signature as the parameter. For example:

       .connect(function(error, con) { ... });

The connect() function generates client and session IDs for this connection instance, which are unique for each instance and are used in subsequent API calls for the session.

On a successful connection, the callback function is called. The callback function in this example calls the renderVega() function.
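If you prefer Promises to nested callbacks, the (error, success) callback described above can be wrapped. The sketch below is one way to do that. connectAsync is a hypothetical helper, not part of the MapD connector, and fakeConnector is a stand-in used only so the example runs outside the browser; in the tutorial's page you would pass the configured MapdCon instance instead.

```javascript
// Hypothetical helper: wraps a callback-style connect() in a Promise.
// It relies only on connect() accepting an (error, success) callback.
function connectAsync(connector) {
  return new Promise((resolve, reject) => {
    connector.connect((error, session) => {
      if (error) {
        reject(error);
      } else {
        resolve(session);
      }
    });
  });
}

// Stand-in connector for demonstration only. The "label" property is
// invented for this stub and is not part of the real connector.
const fakeConnector = {
  connect(callback) {
    callback(null, { label: "demo session" });
  }
};

connectAsync(fakeConnector)
  .then((session) => console.log("connected:", session.label))
  .catch((error) => console.log("connection failed:", error));
```

With this wrapper, a connection failure reaches a single catch handler instead of needing a check at the top of every callback.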

Step 3 - Make the Render Request and Handle the Response

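The MapD connector API renderVega() function sends the Vega JSON to the backend:

    .connect(function(error, con) {
      // Stop here if the connection attempt failed.
      if (error) {
        console.log(error);
        return;
      }
      // Send the Vega specification to the backend as a JSON string.
      con.renderVega(1, JSON.stringify(exampleVega), vegaOptions, function(error, result) {
        if (error) {
          console.log(error.message);
        } else {
          // Display the returned Base64 PNG using a data URI.
          var blobUrl = `data:image/png;base64,${result.image}`
          var body = document.querySelector('body')
          var vegaImg = new Image()
          vegaImg.src = blobUrl
          body.append(vegaImg)
        }
      });
    });

The renderVega() function has the following parameters:

| Parameter | Type | Required | Description |
| --- | --- | --- | --- |
| widgetid | number | X | Calling widget ID. |
| vega | string | X | Vega JSON specification, passed as a string. |
| options | object | | Render query options. compressionLevel: PNG compression level, from 1 (low, fast) to 10 (high, slow). Default = 3. |
| callback | function | | Callback function with (error, success) signature. |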
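The compressionLevel option described above can be set through the options argument. The sketch below builds that object and rejects values outside the documented 1 to 10 range. makeRenderOptions is a hypothetical helper, and the requirement that the level be an integer is an assumption made here, not something the parameter description states.

```javascript
// Hypothetical helper: builds the options object for renderVega().
// Assumption: compressionLevel is expected to be an integer.
function makeRenderOptions(compressionLevel = 3) {
  if (!Number.isInteger(compressionLevel) || compressionLevel < 1 || compressionLevel > 10) {
    throw new RangeError("compressionLevel must be an integer from 1 to 10");
  }
  return { compressionLevel };
}

// Default level, and a faster, lower-compression alternative.
console.log(makeRenderOptions());  // { compressionLevel: 3 }
console.log(makeRenderOptions(1)); // { compressionLevel: 1 }
```

The result can be passed where the tutorial passes vegaOptions. A lower level trades a larger PNG for less server-side compression time.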

| Return | Description |
| --- | --- |
| Base64 image | PNG image rendered on server. |

The backend returns the rendered Base64 image in result.image, which you can display in the browser window using a data URI.
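The data URI step can be separated from the DOM work so that each piece is easy to test. The following is a minimal sketch under that design. toPngDataUri and appendRenderedImage are hypothetical helper names, and the Base64 string in the example is a short placeholder rather than a complete image.

```javascript
// Hypothetical helper: turns a Base64-encoded PNG into a data URI.
function toPngDataUri(base64Image) {
  if (typeof base64Image !== "string" || base64Image.length === 0) {
    throw new TypeError("expected a non-empty Base64 string");
  }
  return `data:image/png;base64,${base64Image}`;
}

// Hypothetical helper: appends the image to the given document's body.
// Passing the document in makes the function usable with a test double.
function appendRenderedImage(doc, base64Image) {
  const img = doc.createElement("img");
  img.src = toPngDataUri(base64Image);
  doc.body.appendChild(img);
  return img;
}

// Placeholder payload for illustration; not a complete PNG.
console.log(toPngDataUri("iVBORw0KGgo=")); // data:image/png;base64,iVBORw0KGgo=
```

In the render callback, calling appendRenderedImage(document, result.image) would take the place of the five lines that build and append the image.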

Source Code

Getting Started Directory Structure

    index.html
    /js
      browser-connector.js
      vegaspec.js
      vegademo.js

HTML

Getting Started index.html

    <!DOCTYPE html>
    <html lang="en">
      <head>
        <title>OmniSci</title>
        <meta charset="UTF-8">
      </head>
      <body>
        <script src="js/browser-connector.js"></script>
        <script src="js/vegaspec.js"></script>
        <script src="js/vegademo.js"></script>
        <script>
          document.addEventListener('DOMContentLoaded', init, false);
        </script>
      </body>
    </html>

JavaScript

Getting Started vegademo.js

    function init() {
      var vegaOptions = {}
      var connector = new MapdCon()
        .protocol("http")
        .host("my.host.com")
        .port("6273")
        .dbName("omnisci")
        .user("omnisci")
        .password("changeme")
        .connect(function(error, con) {
          // Stop here if the connection attempt failed.
          if (error) {
            console.log(error);
            return;
          }
          // Send the Vega specification to the backend as a JSON string.
          con.renderVega(1, JSON.stringify(exampleVega), vegaOptions, function(error, result) {
            if (error) {
              console.log(error.message);
            }
            else {
              // Display the returned Base64 PNG using a data URI.
              var blobUrl = `data:image/png;base64,${result.image}`
              var body = document.querySelector('body')
              var vegaImg = new Image()
              vegaImg.src = blobUrl
              body.append(vegaImg)
            }
          });
        });
    }

Getting Started vegaspec.js

    const exampleVega = {
      "width": 384,
      "height": 564,
      "data": [
        {
          "name": "tweets",
          "sql": "SELECT goog_x as x, goog_y as y, tweets_nov_feb.rowid FROM tweets_nov_feb"
        }
      ],
      "scales": [
        {
          "name": "x",
          "type": "linear",
          "domain": [
            -3650484.1235206556,
            7413325.514451755
          ],
          "range": "width"
        },
        {
          "name": "y",
          "type": "linear",
          "domain": [
            -5778161.9183506705,
            10471808.487466192
          ],
          "range": "height"
        }
      ],
      "marks": [
        {
          "type": "points",
          "from": {
            "data": "tweets"
          },
          "properties": {
            "x": {
              "scale": "x",
              "field": "x"
            },
            "y": {
              "scale": "y",
              "field": "y"
            },
            "fillColor": "blue",
            "size": {
              "value": 3
            }
          }
        }
      ]
    };