Clustering Algorithms

Overview of HeavyML clustering methods

Overview

Clustering methods are important in machine learning and predictive modeling for several reasons:

Identifying patterns in data: Clustering can identify patterns or groups of similar observations in the data, which can provide insights into the underlying structure of the data and inform further analysis.

Feature engineering: Clustering can be used to identify relevant features or group related features together, which can be used to create new features for use in predictive modeling.

Outlier detection: Clustering can identify outliers or unusual observations in the data that may require further investigation or may be removed from the dataset.

Data compression: Clustering can reduce the volume of data by grouping similar observations and representing each group by its cluster label or centroid, which can improve the efficiency of downstream machine learning algorithms.

Overall, clustering can help to identify relationships and patterns in the data that may not be apparent from the raw data, and can inform further analysis and predictive modeling.

HEAVY.AI supports two clustering algorithms, KMeans and DBScan, via dedicated table functions for each. KMeans can be significantly more performant than DBScan, although in some cases DBScan can give better clustering results.

Clustering algorithms, particularly KMeans, can be sensitive to differences in scale between variables. To address this, HEAVY.AI automatically normalizes input data to Z-scores, expressing each value as the number of standard deviations it lies from the mean of its feature.
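As a rough illustration of what Z-score normalization does, the following Python sketch standardizes each feature column so that features on very different scales become comparable. It is not the HEAVY.AI implementation: the function name zscore_columns is hypothetical, and the use of the population standard deviation is an assumption.

```python
from statistics import fmean, pstdev

def zscore_columns(rows):
    """Standardize each column of `rows` (a list of equal-length numeric lists)."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    # Assumption: population standard deviation. A constant column
    # (deviation 0) is mapped to zeros to avoid dividing by zero.
    devs = [pstdev(col) for col in columns]
    return [
        [(value - m) / d if d > 0 else 0.0 for value, m, d in zip(row, means, devs)]
        for row in rows
    ]

# Two made-up features on very different scales (e.g. an age and a density).
rows = [[30.0, 100.0], [40.0, 5000.0], [50.0, 20000.0]]
scaled = zscore_columns(rows)
```

After scaling, every column has mean 0 and standard deviation 1, so no single feature dominates a Euclidean distance simply because of its units.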

Supported Algorithms

KMeans

KMeans is a commonly used clustering algorithm that seeks to partition a set of observations into a user-defined number of clusters (k) by minimizing the within-cluster sum of squares. Advantages of the algorithm include the fact that it is easily understood, and that it tends to be performant relative to other clustering approaches. Disadvantages include the user needing to specify a fixed number of clusters up front, the model's assumption that clusters are always spherical, susceptibility to outliers, sensitivity to initial cluster centroid values, and the need to use input features that allow for Euclidean distance metrics.

The HeavyML implementation of KMeans automatically normalizes each input feature to z-scores (the number of standard deviations each observation lies from the mean of the feature), allowing for use of features with significantly different scales/ranges.
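To make the "within-cluster sum of squares" objective concrete, here is a minimal Python sketch of the textbook Lloyd's iteration for KMeans. It is an illustration only and not the HeavyML implementation: the names kmeans_sketch, sq_dist, and within_cluster_ss are hypothetical, and seeding the centroids with the first k points is an assumption chosen to keep the example deterministic.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
            for p in points]

def kmeans_sketch(points, num_clusters, num_iterations):
    # Assumption: seed centroids with the first k points (deterministic).
    centroids = [tuple(p) for p in points[:num_clusters]]
    for _ in range(num_iterations):
        labels = assign(points, centroids)
        # Update step: move each centroid to the mean of its members.
        for c in range(num_clusters):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assign(points, centroids), centroids

def within_cluster_ss(points, labels, centroids):
    """The objective KMeans tries to minimize."""
    return sum(sq_dist(p, centroids[label]) for p, label in zip(points, labels))

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = kmeans_sketch(points, num_clusters=2, num_iterations=10)
wcss = within_cluster_ss(points, labels, centroids)
```

Because the result depends on the starting centroids, a different seeding strategy can converge to a different partition, which is the sensitivity to initial values noted above.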

Usage

KMeans clustering of data using HeavyML can be achieved by invoking the kmeans table function, which has the following signature:

    SELECT id, cluster_id FROM TABLE(
        kmeans(
            data => CURSOR(SELECT <id_col>, <numeric_feature_1>, <numeric_feature_2>, ...
                           <numeric_feature_n> FROM <source_table>),
            num_clusters => <number of clusters>,
            num_iterations => <number of iterations>,
            init_type => <initialization type>
        )
    );

DBScan

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is a popular density-based clustering algorithm that groups data points based on their proximity and density. Unlike KMeans, DBSCAN does not require specifying the number of clusters up front.

Advantages of the DBScan algorithm include not needing to specify the number of clusters up front, robustness to noise (observations that do not fit in a cluster are marked as such), robustness to outliers, ability to handle clusters of arbitrary shapes (as opposed to purely spherical clusters with KMeans), and support for a variety of distance metrics beyond simple Euclidean distance measures.

Disadvantages include needing to specify the neighborhood radius and the minimum number of observations per cluster, relatively slow performance (especially with high-dimensional datasets), and difficulty identifying clusters of varying density.
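The roles of the neighborhood radius and the minimum number of observations can be seen in a small Python sketch of the classic DBSCAN procedure. This is a brute-force illustration rather than the HeavyML implementation: the function name dbscan_sketch is hypothetical, the parameter names simply mirror the table function arguments, and the use of -1 as the noise label is an assumption of this sketch.

```python
from math import dist

NOISE = -1  # assumption: label used here for observations that join no cluster

def dbscan_sketch(points, epsilon, min_observations):
    """Label each point with a cluster index, or NOISE if it belongs to none."""
    def neighbors(i):
        # Brute-force radius query; the point itself counts as a neighbor.
        return [j for j, q in enumerate(points) if dist(points[i], q) <= epsilon]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_observations:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable but not dense itself
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reach = neighbors(j)
            if len(reach) >= min_observations:
                queue.extend(reach)  # core point: keep expanding the cluster

    return labels

points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10),
          (50, 50)]
labels = dbscan_sketch(points, epsilon=1.5, min_observations=3)
```

The number of clusters is never supplied: two dense groups are discovered from the data, and the isolated point at (50, 50) is left as noise. The pairwise radius queries also hint at why the algorithm is comparatively slow.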

Usage

DBSCAN clustering of data using HeavyML can be achieved by invoking the dbscan table function, which has the following signature:

    SELECT id, cluster_id FROM TABLE(
        dbscan(
            data => CURSOR(SELECT <id_col>, <numeric_feature_1>, <numeric_feature_2>, ...
                           <numeric_feature_n> FROM <source_table>),
            epsilon => <neighborhood radius around each point>,
            min_observations => <minimum number of observations (rows) per cluster>
        )
    );

Example: Clustering Census Data

KMeans clustering can be applied to the US census block group data that ships with the product. In its simplest form, the query is:

SELECT

id,

cluster_id

FROM

TABLE(

kmeans(

data => CURSOR(

SELECT

FIPS,

OWNER_OCC,

AVE_FAM_SZ,

POP12_SQMI,

MED_AGE

FROM

us_census_bg

),

num_clusters => 5,

num_iterations => 10

)

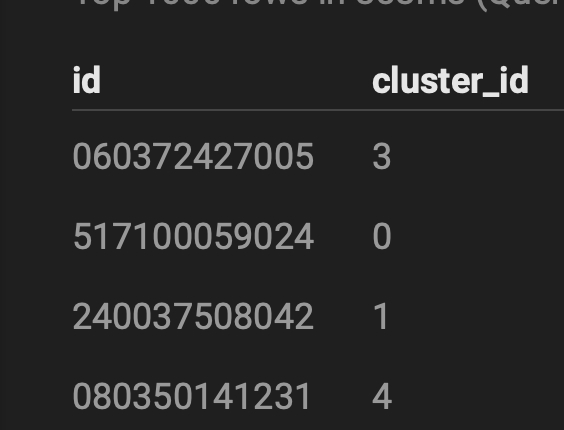

)The outer select statement here is just projecting the two minimal outputs from kmeans, which are a unique ID (in this case from the FIPS code of the census block) and a cluster_id, which is an integer from 0 to k-1.

We are invoking the table function as a source for the FROM clause, and giving it a data cursor containing the numeric columns we want to cluster on. We lead with the unique ID column FIPS as required, and the remaining columns are in arbitrary order. Note that this clustering technique does not support categorical data.

The result we get is an assignment of every census block group in the country into one of 5 classes:

A slightly more elaborate example provides a bit more context on the results and sets them up for better visualization in Immerse.

A common issue with all clustering methods is that they can be a bit "black box" by default, since no explanation is given above about which characteristics formed the clusters or how a particular census block fits within its class. So we recommend projecting the variables used in the classification:

    SELECT
        id,
        CAST (cluster_id as TEXT) as cluster_id_str,
        OWNER_OCC / CAST(HOUSEHOLDS as float) as OWNER_OCC_PCNT,
        AVE_FAM_SZ,
        POP12_SQMI,
        MED_AGE,
        omnisci_geo as block_geom
    FROM
        TABLE(
            kmeans(
                data => CURSOR(
                    SELECT
                        FIPS,
                        OWNER_OCC / CAST(HOUSEHOLDS as float) as OWNER_OCC_PCNT,
                        AVE_FAM_SZ,
                        POP12_SQMI,
                        MED_AGE
                    FROM
                        us_census_bg
                ),
                num_clusters => 5,
                num_iterations => 10
            )
        ),
        us_census_bg
    WHERE
        FIPS = id;

In addition to projecting the original columns and joining these back with a WHERE clause, we've done two other things.

First, we normalized OWNER_OCC to OWNER_OCC_PCNT. This isn't necessary for the clustering, since it does its own normalization. Here it's done simply to make the variable easier to understand in the results.

Second, we CAST the cluster_ids from their default INTEGER type to TEXT. This step is for ease of visualization. Immerse currently represents integers using continuous colors but TEXT as discrete colors. Since classes are discrete, this gives us a better default color scheme.

If you build a simple visualization of the results in Immerse, you will start to see spatial patterns in the demographics that might not be apparent in maps of the individual attributes. This effect becomes more pronounced as you add attributes, and the technique can be useful for visualizing highly multidimensional data of all kinds.
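Alongside a map, one simple way to make the clusters less of a "black box" is to average each clustering variable per cluster once the query results have been fetched. The Python sketch below assumes the rows have already been retrieved as tuples of (cluster_id_str, feature values...); the function name cluster_profiles is hypothetical and the sample rows are made up for illustration, not real census output.

```python
from collections import defaultdict
from statistics import fmean

def cluster_profiles(rows, feature_names):
    """Average each feature per cluster; rows are (cluster_id, feature_1, ..., feature_n)."""
    grouped = defaultdict(list)
    for cluster_id, *features in rows:
        grouped[cluster_id].append(features)
    return {
        cluster_id: {
            name: fmean(column)
            for name, column in zip(feature_names, zip(*members))
        }
        for cluster_id, members in sorted(grouped.items())
    }

# Made-up rows for illustration only, not real census output.
rows = [
    ("0", 0.80, 3.1, 40.0),
    ("0", 0.70, 3.3, 44.0),
    ("1", 0.20, 2.4, 29.0),
    ("1", 0.30, 2.6, 31.0),
]
profiles = cluster_profiles(rows, ["OWNER_OCC_PCNT", "AVE_FAM_SZ", "MED_AGE"])
```

Comparing the per-cluster averages side by side gives a quick sense of which characteristics distinguish each class.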