Examples

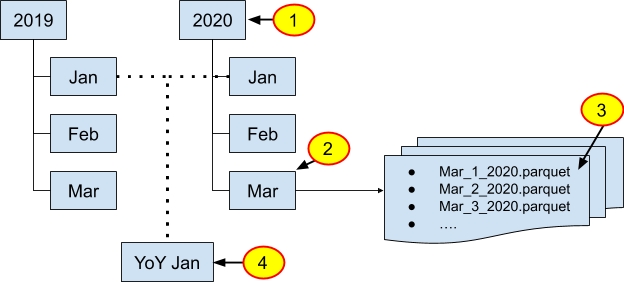

The following examples demonstrate how to use HeavyConnect using the foreign data wrappers, servers, tables, and user mappings. The examples use the following directory structure and underlying Parquet files. The highlighted numbers on the graphic correspond to the numbered examples that follow.

Details of the commands used in the examples are available in the Command Reference.

Example 1: Directory structures and refresh periods

HeavyConnect to the Parquet data organized in the year 2020. Users expect monthly updated data that has been added to existing data.

Create a custom foreign server. This server is reused in all of the examples that follow.

CREATE SERVER example_parquet_server FOREIGN DATA WRAPPER parquet_file WITH (

storage_type = 'LOCAL_FILE',

base_path = '/2020'

);2. Create the foreign table to use. In the CREATE FOREIGN TABLE statement, specify the update type and scheduling.

CREATE FOREIGN TABLE example_year_2020 (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER example_parquet_server

WITH (REFRESH_TIMING_TYPE='SCHEDULED',

REFRESH_UPDATE_TYPE='APPEND',

REFRESH_START_DATE_TIME='2020-01-31T22:30:00Z',

REFRESH_INTERVAL='31D');You can now query and build dashboards using the foreign table example_year_2020.

Example 2: Directory structures and refresh periods

HeavyConnect to the Parquet data organized in the month of March. Users expect weekly updated data that has been added to the existing data.

Reuse the foreign server created in Example 1.

Create the foreign table for use. In the CREATE FOREIGN TABLE statement, specify the update type, scheduling, and the additional file path to the month of March.

CREATE FOREIGN TABLE example_month_march (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER example_parquet_server

WITH (REFRESH_TIMING_TYPE='SCHEDULED',

REFRESH_UPDATE_TYPE='APPEND',

REFRESH_START_DATE_TIME='2020-03-01 22:30',

REFRESH_INTERVAL='7D',

FILE_PATH='Mar');You can now query and build dashboards using the foreign table example_month_march.

Example 3: Directory structures and refresh periods

HeavyConnect to the Parquet data Mar_01_2020. Users expect daily updated data that may have been edited throughout the file.

Use the foreign server you created in Example 1.

Create the foreign table for use. In the CREATE FOREIGN TABLE statement, specify the update type, scheduling, and the additional file path to the month of March.

CREATE FOREIGN TABLE example_day_march_01 (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER example_parquet_server

WITH (REFRESH_TIMING_TYPE='SCHEDULED',

REFRESH_UPDATE_TYPE='ALL',

REFRESH_START_DATE_TIME='2020-03-01 23:30',

REFRESH_INTERVAL='1D',

FILE_PATH='Mar/Mar_01_2020.parquet');You can now query and build dashboards using the foreign table example_day_march_01.

Example 4: Directory structures and refresh periods

HeavyConnect to the Parquet data of year-over-year (YoY) January data. Users manually refresh the data that has been updated throughout the file.

Create the YoY Jan directory with symlinks to the January directories.

Use the foreign server provided by HeavyDB. You do not need to create a new one.

Create the foreign table for use. In the CREATE FOREIGN TABLE statement, specify the update type, scheduling, the additional file path, and the default HeavyDB server.

CREATE FOREIGN TABLE example_yoy_january (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER default_local_parquet

WITH (REFRESH_TIMING_TYPE='manual',

REFRESH_UPDATE_TYPE='ALL',

FILE_PATH='/yoy_january');You can now query and build dashboards using the foreign table example_yoy_january.

Example 5: AWS S3 Datastore

This example provides the full workflow required to HeavyConnect to a private S3 Parquet datastore.

Create a custom S3 foreign server for the private S3 bucket.

CREATE SERVER example_S3_parquet_server FOREIGN DATA WRAPPER parquet_file WITH (

storage_type = 'AWS_S3',

base_path = '/2020',

s3_bucket = 'my-s3-bucket',

aws_region = 'us-west-1'

);2. Set up credentials for the foreign server. In the CREATE USER MAPPING statement, set the S3 access keys and S3 secret key.

CREATE USER MAPPING FOR PUBLIC SERVER example_S3_parquet_server WITH (

s3_access_key = 'xxxx',

s3_secret_key = 'xxxx'

); 3. Create the foreign table to use. In the CREATE FOREIGN TABLE statement, specify the update type and scheduling.

CREATE FOREIGN TABLE example_year_2020 (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER example_S3_parquet_server

WITH (REFRESH_TIMING_TYPE='SCHEDULED',

REFRESH_UPDATE_TYPE='APPEND',

REFRESH_START_DATE_TIME='2020-01-31 22:30',

REFRESH_INTERVAL='31D');You can now query and build dashboards using the foreign table example_year_2020.

Example 6: AWS S3 Datastore

This example provides the full workflow required to HeavyConnect to a public S3 CSV datastore that uses the S3 Select access type.

Create a custom S3 foreign server for the public S3 bucket.

CREATE SERVER example_S3_csv_server FOREIGN DATA WRAPPER delimited_file WITH (

storage_type = 'AWS_S3',

base_path = '/2020',

s3_bucket = 'my-s3-bucket',

aws_region = 'us-west-1'

);2. Set the public credentials for the foreign server, which uses the S3 Select access type. In the CREATE USER MAPPING statement, set the S3 access keys and S3 secret key.

CREATE USER MAPPING FOR PUBLIC SERVER example_S3_csv_server WITH (

s3_access_key = 'xxxx',

s3_secret_key = 'xxxx'

); 3. Create the foreign table using the S3 Select access type. In the CREATE FOREIGN TABLE statement, specify the update type and scheduling.

CREATE FOREIGN TABLE example_year_2020 (

id INTEGER,

vendor_id TEXT ENCODING DICT(32),

year_datetime TIMESTAMP(0),

year_activities_longitude DECIMAL(14,2),

year_activities_latitude DECIMAL(14,2),

precipitation SMALLINT,

snow_depth SMALLINT,

snowfall SMALLINT,

max_temperature SMALLINT,

min_temperature SMALLINT,

average_wind_speed SMALLINT)

SERVER example_S3_csv_server

WITH (REFRESH_TIMING_TYPE='SCHEDULED',

REFRESH_UPDATE_TYPE='APPEND',

REFRESH_START_DATE_TIME='2020-01-31 22:30',

REFRESH_INTERVAL='31D',

S3_ACCESS_TYPE = 'S3_SELECT');You can now query and build dashboards using the foreign table example_year_2020.

Example 7: Processing Access Logs

This example illustrates how the regex parsed file data wrapper can be used to query a local log file, which uses the Common Log Format standard. Assume that the log file has the following content:

20.182.146.93 - joe [17/Nov/2021:13:00:00 -0800] "GET /posts HTTP/1.1" 200 1000

182.226.45.18 - bob [17/Nov/2021:13:05:00 -0800] "GET /home HTTP/1.1" 200 402

0.230.116.14 - sue [17/Nov/2021:13:20:00 -0800] "GET /profile HTTP/1.1" 200 550

20.182.146.93 - joe [17/Nov/2021:13:20:00 -0800] "POST /posts/1234/comments HTTP/1.1" 500 334

20.182.146.93 - joe [17/Nov/2021:13:21:00 -0800] "POST /posts/1234/comments HTTP/1.1" 200 120

20.182.146.93 - joe [17/Nov/2021:13:30:00 -0800] "POST /posts/1235/comments HTTP/1.1" 200 89

182.226.45.18 - bob [17/Nov/2021:13:31:00 -0800] "GET /posts HTTP/1.1" 200 1000

0.230.116.14 - sue [17/Nov/2021:13:31:00 -0800] "GET /posts HTTP/1.1" 200 1000

0.230.116.14 - sue [17/Nov/2021:13:35:00 -0800] "POST /posts/1234/comments HTTP/1.1" 200 49

20.182.146.93 - joe [17/Nov/2021:13:40:00 -0800] "POST /posts/1234/comments HTTP/1.1" 200 100Create a foreign table that extracts all the fields in the logs:

CREATE FOREIGN TABLE access_logs (

ip_address TEXT,

user_id TEXT,

log_timestamp TIMESTAMP,

http_method TEXT,

endpoint TEXT,

http_status SMALLINT,

response_size BIGINT

) SERVER default_local_regex_parsed

WITH (file_path = '/logs/sample.log',

line_regex = '^(\d+\.\d+\.\d+\.\d+)\s+\-\s+(\w+)\s+\[([^\]]+)\]\s+\"(\w+)\s+([^\s]+)\s+HTTP\/1\.1"\s+(\d+)\s+(\d+)$');

The table can now be queried using a HeavyDB client as normal:

SELECT * FROM access_logs WHERE http_status != 200;

ip_address |user_id|log_timestamp |http_method|endpoint |http_status|response_size

20.182.146.93|joe |2021-11-17 21:20:00|POST |/posts/1234/comments|500 |334Example 8: Processing Multi-Line Access Logs

The above example shows how a log file can be queried using the regex parsed file data wrapper. However, in certain cases, log messages may span multiple lines. In such a case, a "line_start_regex" option can be used to indicate the start of a new entry.

Assume that the log file has the following content with some entries spanning multiple lines:

20.182.146.93 - joe [17/Nov/2021:13:00:00 -0800] "GET /posts

HTTP/1.1" 200 1000

182.226.45.18 - bob

[17/Nov/2021:13:05:00 -0800] "GET /home HTTP/1.1" 200 402

0.230.116.14 - sue [17/Nov/2021:13:20:00 -0800] "GET /profile HTTP/1.1" 200 550

20.182.146.93 - joe [17/Nov/2021:13:20:00 -0800] "POST /posts/1234/comments HTTP/1.1" 500 334

20.182.146.93 - joe [17/Nov/2021:13:21:00 -0800] "POST

/posts/1234/comments HTTP/1.1" 200 120

20.182.146.93 - joe

[17/Nov/2021:13:30:00 -0800]

"POST /posts/1235/comments HTTP/1.1"

200

89

182.226.45.18 - bob [17/Nov/2021:13:31:00 -0800] "GET /posts HTTP/1.1" 200 1000

0.230.116.14 - sue [17/Nov/2021:13:31:00 -0800]

"GET /posts HTTP/1.1" 200

1000

0.230.116.14 - sue [17/Nov/2021:13:35:00 -0800] "POST /posts/1234/comments HTTP/1.1" 200 49

20.182.146.93 - joe [17/Nov/2021:13:40:00 -0800] "POST /posts/1234/comments HTTP/1.1"

200 100Create a foreign table that extracts all the fields in the logs:

CREATE FOREIGN TABLE access_logs (

ip_address TEXT,

user_id TEXT,

log_timestamp TIMESTAMP,

http_method TEXT,

endpoint TEXT,

http_status SMALLINT,

response_size BIGINT

) SERVER default_local_regex_parsed

WITH (file_path = '/logs/sample.log',

line_regex = '^(\d+\.\d+\.\d+\.\d+)\s+\-\s+(\w+)\s+\[([^\]]+)\]\s+\"(\w+)\s+([^\s]+)\s+HTTP\/1\.1"\s+(\d+)\s+(\d+)$',

line_start_regex = '^(\d+\.\d+\.\d+\.\d+)');The table can now be queried using a HeavyDB client as normal:

SELECT * FROM access_logs WHERE http_status != 200;

ip_address |user_id|log_timestamp |http_method|endpoint |http_status|response_size

20.182.146.93|joe |2021-11-17 21:20:00|POST |/posts/1234/comments|500 |334

Example 9: PostgreSQL Access Using an ODBC DSN (Beta)

This example illustrates how the ODBC data wrapper can be used to access data residing in a PostgreSQL RDMS.

Download the PostgreSQL ODBC driver (see https://odbc.postgresql.org/ for more details. Alternatively, this can be done via an Operating System package manager).

Add an

/etc/odbc.inifile with the following configuration:

[ODBC Data Sources]

postgres_db_1=postgres_db_1

[postgres_db_1]

Description=Local PostgreSQL database

Driver=/usr/lib/odbc-drivers/libpgodbc.so ; Symlink to downloaded driver .so file

Database=postgres

Servername=localhost

Port=5432

Alternatively, the above configuration can be put in an .odbc.ini file in the home directory (i.e. ~/.odbc.ini), if the server process is started under a specific user account.

3. Create a custom ODBC foreign server for the PostgreSQL database:

CREATE SERVER my_postgres_server FOREIGN DATA WRAPPER odbc

WITH (data_source_name = 'postgres_db_1');4. Set the credentials for the foreign server using a user mapping:

CREATE USER MAPPING FOR PUBLIC SERVER my_postgres_server

WITH (username = 'username', password = 'password');5. Create a foreign table that references the above server:

CREATE FOREIGN TABLE example_table (device_id INTEGER, message TEXT, event_timestamp TIMESTAMP)

SERVER my_postgres_server

WITH (sql_select = 'SELECT * FROM remote_postgres_table WHERE event_timestamp > $$2020-01-01$$;',

sql_order_by = 'event_timestamp');You can now query the foreign table as normal.

For more information, see ODBC Data Wrapper Reference.

Example 10: PostgreSQL Access Using ODBC Connection String (Beta)

The above example shows how an RDMS can be accessed using an ODBC configuration file that resides in the server. System administrator might not know and configure all possible RDMS databases that users want to use; the ODBC data wrapper provides an alternative of setting the configuration for remote RDMS databases using a connection string.

In this example, assume that data needs to be fetched from another PostgreSQL database called "my_postgres_db", which is on a server with hostname "my_postgres.example.com" running on port 1234.

Download the PostgreSQL ODBC driver (and drivers for all types of RDMS users are expected to use).

Add an

/etc/odbcinst.inifile with configurations for all drivers:

[ODBC Drivers]

PostgreSQL=Installed

Redshift=Installed

Snowflake=Installed

[PostgreSQL]

Description=PostgreSQL ODBC driver

Driver=/usr/lib/odbc-drivers/libpgodbc.so ; Symlink to downloaded driver .so file

[Redshift]

Description=Redshift ODBC driver

Driver=/usr/lib/odbc-drivers/libredshiftodbc.so ; Symlink to downloaded driver .so file

[Snowflake]

Description=Snowflake ODBC Driver

Driver=/usr/lib/odbc-drivers/libsnowflakeodbc.so ; Symlink to downloaded driver .so file3. Create a custom ODBC foreign server for the PostgreSQL database:

CREATE SERVER my_postgres_server FOREIGN DATA WRAPPER odbc

WITH (connection_string = 'Driver=PostgreSQL;Database=my_postgres_db;Servername=my_postgres.example.com;Port=1234');4. Set the credentials for the foreign server using a user mapping:

CREATE USER MAPPING FOR PUBLIC SERVER my_postgres_server

WITH (credential_string = 'Username=username;Password=password'); 5. Create a foreign table that references the above server:

CREATE FOREIGN TABLE example_table (device_id INTEGER, message TEXT, event_timestamp TIMESTAMP)

SERVER my_postgres_server

WITH (sql_select = 'SELECT * FROM remote_postgres_table WHERE event_timestamp > $$2020-01-01$$;',

sql_order_by = 'event_timestamp'); You can now query the foreign table as normal.

For more information, see ODBC Data Wrapper Reference.