HEAVY.AI Installation on RHEL

This is an end-to-end recipe for installing HEAVY.AI on a Red Hat Enterprise Linux (RHEL) 8.x machine using CPU and GPU devices.

The order of these instructions is significant. To avoid problems, install each component in the order presented.

Assumptions

These instructions assume the following:

You are installing on a "clean" Rocky Linux or RHEL 8 host machine with only the operating system installed.

Your HEAVY.AI host only runs the daemons and services required to support HEAVY.AI.

Your HEAVY.AI host is connected to the Internet.

Preparation

Prepare your machine by updating your system and optionally enabling or configuring a firewall.

Update and Reboot

Update the entire system and reboot the system if needed.

sudo dnf -y update

sudo reboot

Install the utilities needed to create HEAVY.AI repositories and download installation binaries.

sudo dnf -y install dnf-utils curl libldap2-dev

JDK

Follow these instructions to install a headless JDK and configure an environment variable with a path to the library. The “headless” Java Development Kit does not provide support for keyboard, mouse, or display systems. It has fewer dependencies and is best suited for a server host. For more information, see https://openjdk.java.net.

Open a terminal on the host machine.

Install the headless JDK using the following command:

sudo dnf -y install java-1.8.0-openjdk-headless

Create the HEAVY.AI User

Create a group called heavyai and a user named heavyai, who will own HEAVY.AI software and data on the file system.

You can create the group, user, and home directory using the useradd command with the --user-group and --create-home switches:

sudo useradd --user-group --create-home --groups wheel heavyai

Set a password for the user using the passwd command.

sudo passwd heavyai

Log in with the newly created user.

sudo su - heavyai

Installation

There are two ways to install the HEAVY.AI software:

DNF Installation: Install HEAVY.AI from a package repository using DNF, which handles the installation and its dependencies for you.

Tarball Installation: Download a compressed archive (tarball) from the official HEAVY.AI release site and extract it manually. This method gives you more control over where and how the software is installed.

Install NVIDIA Drivers ᴳᴾᵁ ᴼᴾᵀᴵᴼᴺ

If your system includes NVIDIA GPUs but the drivers are not installed, it is advisable to install them before proceeding with the suite installation.

See Install NVIDIA Drivers and Vulkan on Rocky Linux and RHEL for details.
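Before deciding whether the drivers still need to be installed, it can help to check whether a working NVIDIA driver is already present. The following is a minimal sketch that assumes the nvidia-smi utility, which ships with the NVIDIA driver, is a reliable indicator. The function name detect_device is hypothetical and not part of HEAVY.AI.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of HEAVY.AI): print "cuda" when a working
# NVIDIA driver is detected through nvidia-smi, and "cpu" otherwise.
detect_device() {
  if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi -L >/dev/null 2>&1; then
    echo "cuda"
  else
    echo "cpu"
  fi
}

detect_device
```

If this prints cpu on a machine that has NVIDIA GPUs, the drivers are probably missing or not loaded.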

Installing with DNF

Create a DNF repository depending on the edition (Enterprise, Free, or Open Source) and execution device (GPU or CPU) you will use.
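The repository URL encodes both choices. As a rough sketch, the helper below composes it from an edition code and a device. Only the ee and cuda combination appears in this guide; the os (Open Source) and cpu path segments, and the idea that Free Edition shares the ee repository, are assumptions that should be checked against the release site. The function name heavyai_repo_url is hypothetical. The command that follows uses the Enterprise Edition GPU repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the DNF repository URL from an edition code and
# an execution device. The "os" and "cpu" values are assumptions that follow
# the pattern of the one URL shown in this guide (ee + cuda).
heavyai_repo_url() {
  local edition="$1" device="$2"
  case "$edition" in
    ee|os) ;;
    *) echo "unknown edition: $edition" >&2; return 1 ;;
  esac
  case "$device" in
    cuda|cpu) ;;
    *) echo "unknown device: $device" >&2; return 1 ;;
  esac
  echo "https://releases.heavy.ai/${edition}/yum/stable/${device}"
}

# Print the URL used in this guide.
heavyai_repo_url ee cuda
```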

sudo dnf config-manager --add-repo \
https://releases.heavy.ai/ee/yum/stable/cuda

Add the GPG key to the newly added repository.

sudo dnf config-manager --save \
--setopt="releases.heavy*.gpgkey=https://releases.heavy.ai/GPG-KEY-heavyai"

Use DNF to install the latest version of HEAVY.AI.

sudo dnf -y install heavyai.x86_64

Installing with a Tarball

Create the installation directory.

sudo mkdir /opt/heavyai && sudo chown $USER /opt/heavyai

Download the archive and install the latest version of the software. Choose the archive that matches your edition (Enterprise, Free, or Open Source) and the device used at runtime; the example below downloads the Enterprise Edition render build.

curl \
https://releases.heavy.ai/ee/tar/heavyai-ee-latest-Linux-x86_64-render.tar.gz \
| sudo tar zxf - --strip-components=1 -C /opt/heavyai

Configuration

Follow these steps to configure your HEAVY.AI environment.

Set Environment Variables

For your convenience, you can update .bashrc with these environment variables:

echo "# HEAVY.AI variables and paths
export HEAVYAI_PATH=/opt/heavyai
export HEAVYAI_BASE=/var/lib/heavyai
export HEAVYAI_LOG=\$HEAVYAI_BASE/storage/log
export PATH=\$HEAVYAI_PATH/bin:\$PATH" \
>> ~/.bashrc

source ~/.bashrc

Although this step is optional, the rest of this guide refers to the HEAVYAI_BASE and HEAVYAI_PATH variables. HEAVYAI_BASE is the path where configuration, license, and data files are stored, and HEAVYAI_PATH is the location of the software installation. Setting them is strongly recommended.
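To confirm that the variables are visible in the current shell, a small check such as the following can be used. It is only a sketch: the function name check_heavyai_env is hypothetical, and the variable names are the ones set in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the name of each expected variable that is unset
# or empty, and return non-zero if any of them is missing.
check_heavyai_env() {
  local missing=0 name value
  for name in HEAVYAI_PATH HEAVYAI_BASE HEAVYAI_LOG; do
    eval "value=\${$name:-}"   # read the variable whose name is in $name
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

check_heavyai_env || echo "Run 'source ~/.bashrc' or set the variables manually."
```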

Initialization

Run the script, located in the systemd folder, that initializes the HEAVY.AI services and database storage.

cd $HEAVYAI_PATH/systemd

./install_heavy_systemd.sh

Accept the default values provided or make changes as needed.

The script creates a data directory in $HEAVYAI_BASE/storage (where $HEAVYAI_BASE is typically /var/lib/heavyai) with the directories catalogs, data, and log, which will contain the metadata, the data of the database tables, and the log files from Immerse's web server and the database.

The log folder is particularly important for database administrators. It contains data about the system's health, performance, and user activities.
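After the script finishes, the expected layout can be verified with a short check like the one below. This is a sketch under the assumption that the storage directory is $HEAVYAI_BASE/storage with the three subdirectories named above; the function name check_storage_layout is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm that a storage directory contains the
# catalogs, data, and log subdirectories. Returns non-zero if any is missing.
check_storage_layout() {
  local storage_dir="$1" status=0 sub
  for sub in catalogs data log; do
    if [ -d "$storage_dir/$sub" ]; then
      echo "ok: $storage_dir/$sub"
    else
      echo "missing: $storage_dir/$sub"
      status=1
    fi
  done
  return "$status"
}

# Falls back to /var/lib/heavyai when HEAVYAI_BASE is not set.
check_storage_layout "${HEAVYAI_BASE:-/var/lib/heavyai}/storage" || true
```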

Activation

The first step to activate the system is starting HeavyDB and the Web Server service that Heavy Immerse needs. ¹

Enable the HEAVY.AI services so that they start automatically at boot, and start them now.

sudo systemctl enable heavydb --now

sudo systemctl enable heavy_web_server --now

Configure the Firewall ᴼᴾᵀᴵᴼᴺᴬᴸ

If a firewall is not already installed and you want to harden your system, install and start firewalld.

sudo dnf -y install firewalld

sudo systemctl start firewalld

sudo systemctl enable firewalld

sudo systemctl status firewalld

To use Heavy Immerse or other third-party tools, you must prepare your host machine to accept incoming HTTP(S) connections. Configure your firewall for external access:

sudo firewall-cmd --zone=public --add-port=6273-6274/tcp --add-port=6278/tcp --permanent

sudo firewall-cmd --reload

For more information, see https://fedoraproject.org/wiki/Firewalld?rd=FirewallD.
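To check that the opened ports actually accept connections, a reachability test along these lines may help. It is a sketch only: the function name port_open is hypothetical, it relies on bash's /dev/tcp redirection and the coreutils timeout command, and localhost is a placeholder. For a meaningful firewall test, run it from a client machine and pass the server's host name instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a TCP connection to host:port succeeds
# within two seconds, non-zero otherwise.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Ports taken from the firewall rule in this section; the host is a placeholder.
for port in 6273 6274 6278; do
  if port_open localhost "$port"; then
    echo "port $port: reachable"
  else
    echo "port $port: not reachable"
  fi
done
```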

Licensing HEAVY.AI ᵉᵉ⁻ᶠʳᵉᵉ ᵒⁿˡʸ

If you are on Enterprise or Free Edition, you need to validate your HEAVY.AI instance with your license key. You can skip this section if you are using Open Source Edition. ²

Copy your license key from the registration email message. If you have not received your license key, contact your Sales Representative or register for your 30-day trial here.

Connect to Heavy Immerse using a web browser connected to your host machine on port 6273. For example, http://heavyai.mycompany.com:6273.

When prompted, paste your license key in the text box and click Apply.

Log into Heavy Immerse by entering the default username (admin) and password (HyperInteractive), and then click Connect.

The $HEAVYAI_BASE directory must be dedicated to HEAVY.AI; do not set it to a directory shared by other packages.

Final Checks

To verify that everything is working, load some sample data, run a heavysql query, and generate a Scatter Plot using Heavy Immerse. ¹

Load Sample Data and Run a Simple Query

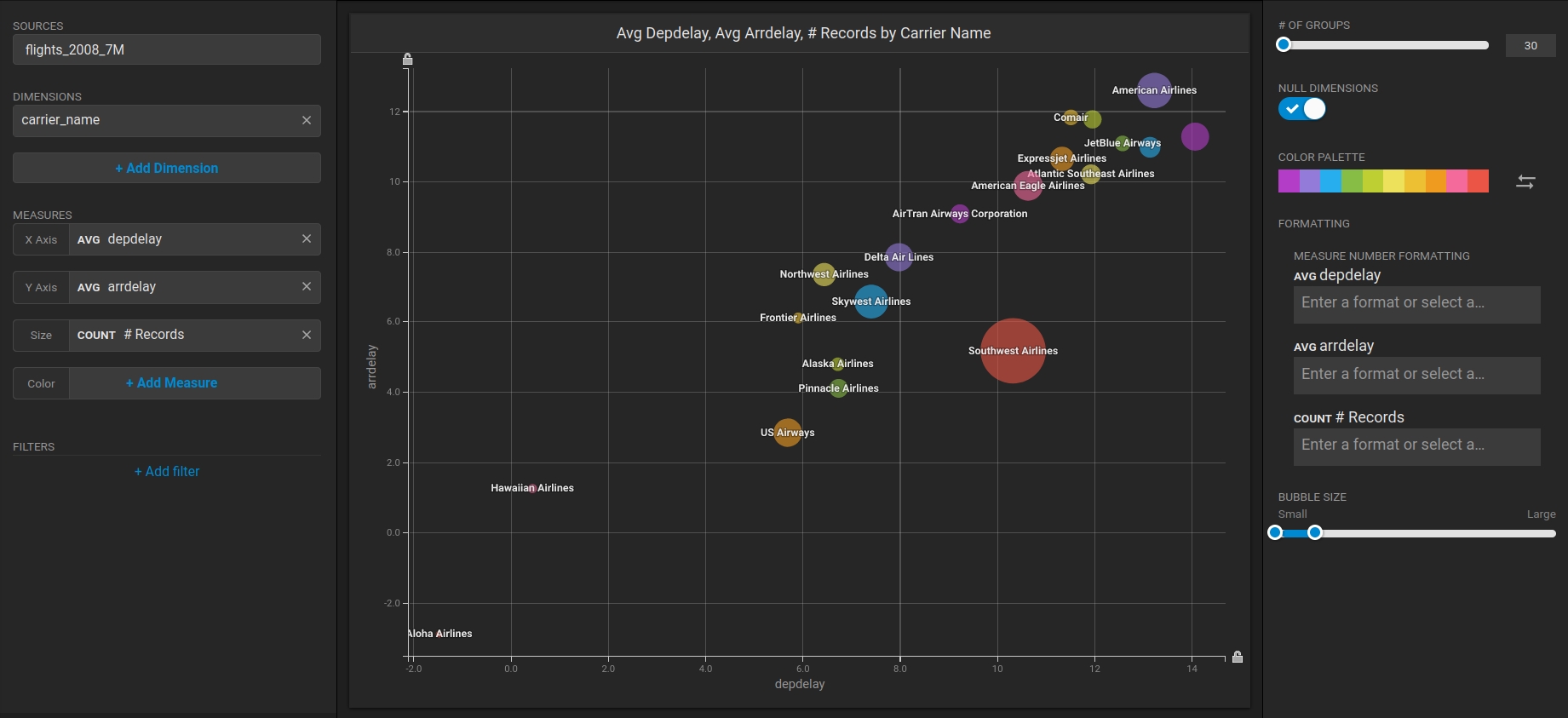

HEAVY.AI ships with two sample datasets of airline flight information collected in 2008, and a census of New York City trees. To install sample data, run the following command.

cd $HEAVYAI_PATH

sudo ./insert_sample_data --data /var/lib/heavyai/storage

# Enter dataset number to download, or 'q' to quit:
      Dataset                   Rows    Table Name             File Name
1)    Flights (2008)            7M      flights_2008_7M        flights_2008_7M.tar.gz
2)    Flights (2008)            10k     flights_2008_10k       flights_2008_10k.tar.gz
3)    NYC Tree Census (2015)    683k    nyc_trees_2015_683k    nyc_trees_2015_683k.tar.gz

Connect to HeavyDB by entering the following command in a terminal on the host machine (default password is HyperInteractive):

$HEAVYAI_PATH/bin/heavysql -p HyperInteractive

Enter a SQL query such as the following:

SELECT origin_city AS "Origin",
       dest_city AS "Destination",
       AVG(airtime) AS "Average Airtime"
FROM flights_2008_10k
WHERE distance < 175
GROUP BY origin_city, dest_city;

The results should be similar to the results below.

Origin|Destination|Average Airtime
Austin|Houston|33.055556
Norfolk|Baltimore|36.071429
Ft. Myers|Orlando|28.666667
Orlando|Ft. Myers|32.583333
Houston|Austin|29.611111
Baltimore|Norfolk|31.714286

Create a Dashboard Using Heavy Immerse ᵉᵉ⁻ᶠʳᵉᵉ ᵒⁿˡʸ ¹

After installing Enterprise or Free Edition, check if Heavy Immerse is running as intended.

Connect to Heavy Immerse using a web browser connected to your host machine on port 6273. For example, http://heavyai.mycompany.com:6273.

Log into Heavy Immerse by entering the default username (admin) and password (HyperInteractive), and then click Connect.

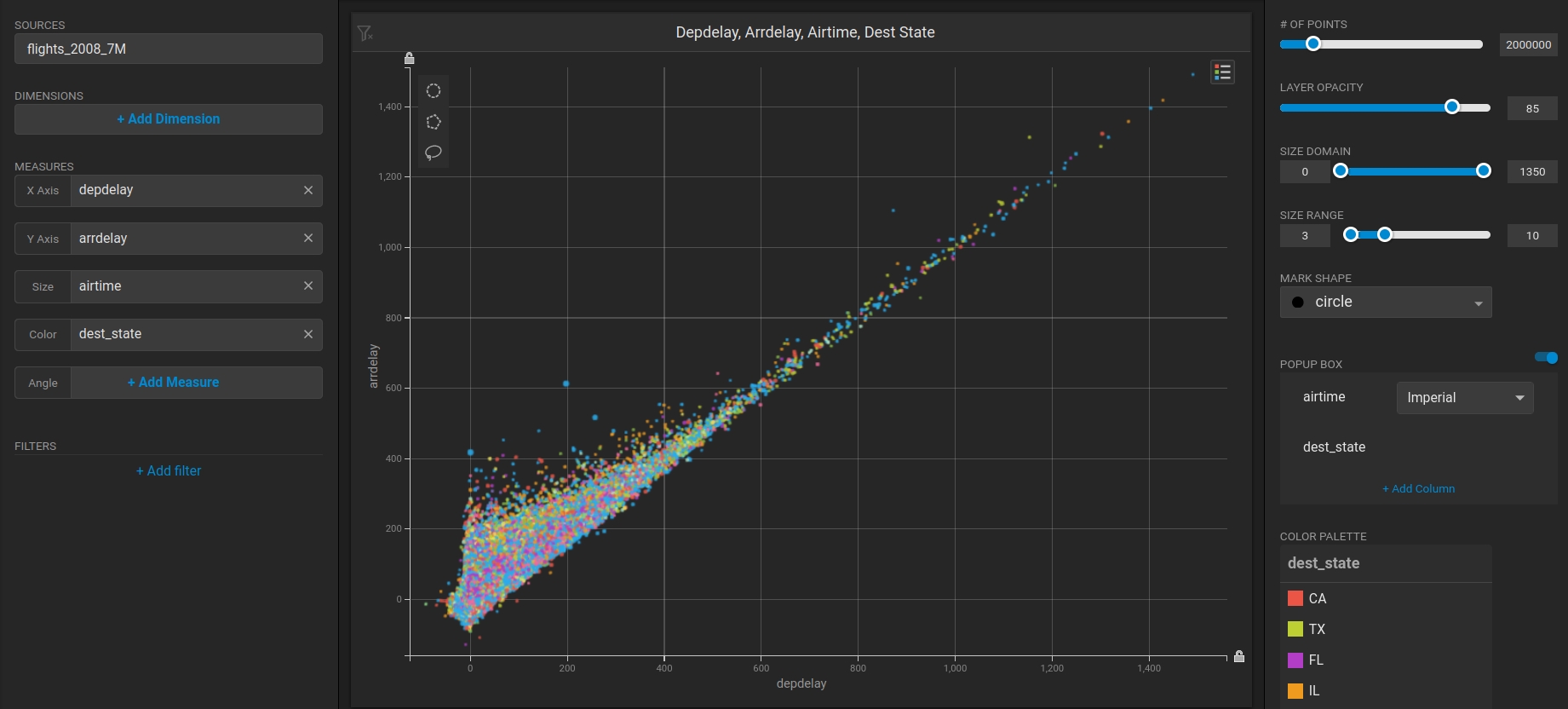

Create a new dashboard and a Scatter Plot to verify that backend rendering is working.

Click New Dashboard.

Click Add Chart.

Click SCATTER.

Click Add Data Source.

Choose the flights_2008_10k table as the data source.

Click X Axis +Add Measure.

Choose depdelay.

Click Y Axis +Add Measure.

Choose arrdelay.

Click Size +Add Measure.

Choose airtime.

Click Color +Add Measure.

Choose dest_state.

The resulting chart shows a direct correlation between departure delay and arrival delay. If the chart renders, backend rendering is working as intended.

¹ In the OS Edition, Heavy Immerse is unavailable.

² The OS Edition does not require a license key.
